Introduction

Solving the ‘Scalability Trilemma’ is the Holy Grail of crypto, but it comes with numerous misconceptions. Many cryptocurrency projects now claim to have solved the blockchain trilemma when, in fact, they have not. A cryptocurrency that adequately solves the trilemma would be able to provide enormous value to the world, which is why it is such a coveted trophy.

What Is The Scalability Trilemma?

Security, decentralisation, and capacity; you get to pick two, or so says the trilemma. The concept was coined by the now famous cryptocurrency leader, Vitalik Buterin. In essence, you must sacrifice one feature for the sake of the other two. More specifically, he stated that if you only use “simple” techniques you can achieve two of these features. I’ll come back to this concept of “simple” later. Let’s define exactly what these features are:

Scalability: The chain can process enough transactions for the whole world on a single regular node, without requiring users to pay high fees to make use of it.

Decentralisation: The network can run without any trusted groups or entities and without a small set of large, centralized actors. Someone should be able to make use of the network without having to worry that an entity is able to censor their transactions.

Security: The network is required to function properly even if a large malicious entity is trying to attack it. A user of the network must be able to receive a transaction without worrying that they are at risk of fraud being committed against them.

Misconceptions: Block Size

There are a lot of incorrect assumptions found scattered around the crypto community, even in some cryptocurrency whitepapers. The false assumption is this:

Bitcoin-like cryptocurrencies cannot scale because as you increase the number of transactions, blocks increase in size proportionally. This increases the amount of time it takes to propagate these blocks across the network. As you increase propagation time of blocks, you increase the quantity of orphaned blocks. This acts as a centralisation pressure as more closely connected miners, and larger miners, have an advantage over more poorly connected and smaller miners. The larger miners will suffer less from orphans and will therefore be more profitable.

Taken on its own, the above is true, and it is certainly true for Bitcoin, but it is a false assumption for Nexa. The data that must be propagated for a Nexa block is not proportional to the number of transactions in it, and how this works is explained later in the article.

High BPS = High Scalability

Firstly, blocks per second isn’t a scalability metric because a block can have an arbitrary number of transactions within it. To measure capacity, we need a value that allows us to make relevant comparisons between blockchain networks. The best value for this is TPS (transactions per second). While technically the most accurate measurement would be data per second, this is not particularly human-oriented. We want to know realistically how many people can use the network, and TPS is a great measure for this.
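To make the point concrete, here is a minimal sketch comparing two made-up chains. The function name and both sets of numbers are illustrative assumptions, not measurements of any real network.

```python
def transactions_per_second(blocks_per_second: float, txs_per_block: int) -> float:
    """Throughput is the block rate multiplied by how many transactions each block holds."""
    return blocks_per_second * txs_per_block

# Hypothetical chain A: 10 small blocks every second, 50 transactions each.
chain_a = transactions_per_second(blocks_per_second=10, txs_per_block=50)

# Hypothetical chain B: one block every two seconds, 2,000 transactions each.
chain_b = transactions_per_second(blocks_per_second=0.5, txs_per_block=2_000)

print(f"chain A: {chain_a:.0f} TPS at 10 BPS")
print(f"chain B: {chain_b:.0f} TPS at 0.5 BPS")
```

Chain A produces twenty times as many blocks, yet chain B carries twice the transactions, which is why BPS alone says nothing about capacity.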

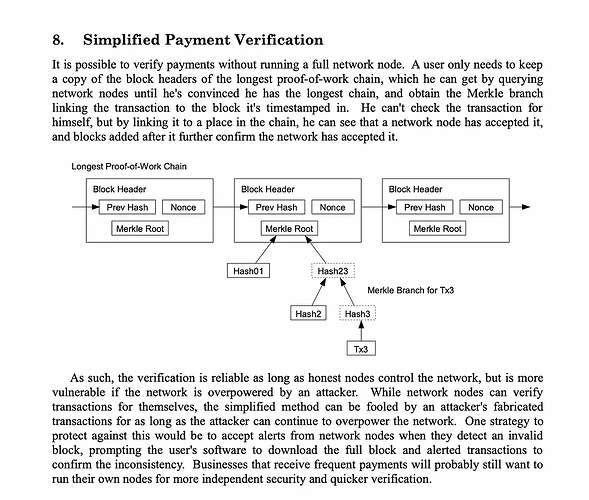

A large number of blocks per second can actually harm not only scalability but also decentralisation. If a blockchain network has a very high number of blocks per second, it becomes infeasible for a lite wallet, such as one on a mobile device, to use SPV (simplified payment verification) technology.

SPV allows non-full-nodes to still cryptographically verify that a payment has been included in the blockchain via SPV proofs and the full blockchain of headers. The full blockchain of headers provides a perfect chain all the way back to genesis, proving that it is the chain with the most proof-of-work. The SPV proof proves that a specific transaction has been included in an existing block within the blockchain. Without this proof, the data provider to a lite wallet can easily provide false information about a transaction that is supposedly in the blockchain when in fact it is not. For example, it could say that the user has received a payment when they actually have not. This creates a trust relationship between the data provider and the user of a lite wallet, when cryptocurrencies should be trustless to avoid fraud. SPV is key to maintaining trustlessness and decentralisation while scaling to billions of users.
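The inclusion check described above can be sketched as a Merkle proof. This is a minimal illustration that assumes a Bitcoin-style Merkle tree built with double SHA256; Nexa's actual header and proof formats may differ, and all function names here are hypothetical.

```python
import hashlib

def dsha256(data: bytes) -> bytes:
    """Double SHA256, as used in Bitcoin-style Merkle trees (assumed here)."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def next_level(level):
    """Hash adjacent pairs, duplicating the last node when the count is odd."""
    if len(level) % 2:
        level = level + [level[-1]]
    return [dsha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]

def merkle_root(leaves):
    """Root of a non-empty list of transaction IDs."""
    level = list(leaves)
    while len(level) > 1:
        level = next_level(level)
    return level[0]

def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each with a 'sibling is on the left' flag."""
    proof, level = [], list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = next_level(level)
        index //= 2
    return proof

def verify_proof(leaf, proof, root):
    """What a lite wallet does: rebuild the root from one txid and its proof."""
    h = leaf
    for sibling, sibling_is_left in proof:
        h = dsha256(sibling + h) if sibling_is_left else dsha256(h + sibling)
    return h == root

txids = [dsha256(f"tx{i}".encode()) for i in range(5)]
root = merkle_root(txids)            # this value lives in the block header
proof = merkle_proof(txids, 3)       # supplied by the data provider

print(verify_proof(txids[3], proof, root))          # genuine payment
print(verify_proof(dsha256(b"fake"), proof, root))  # fabricated payment
```

The wallet only needs the header's root and a handful of hashes per payment, so the provider cannot claim a transaction is in a block without the proof checking out.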

Security — Decentralisation — Capacity

The Problem

What we want is to maximise security, decentralisation, and capacity all at the same time to solve the trilemma and create the ultimate cryptocurrency.

But how to do this? The easiest to explain is security as this remains unchanged from Bitcoin. Miners compete for the block reward by cycling through potential solutions to the proof-of-work problem. I won’t go deep into this as it is a generally well understood part of cryptocurrency systems.

What would happen if we massively increased the size of blocks on Bitcoin as it was originally built? As the size of blocks scaled with capacity, the amount of time to propagate a block across the network would increase proportionally, i.e., it would take ten times as long to send ten times as much data. As blocks reach a size where propagation is so slow that multiple blocks regularly compete for the same block reward, this would temporarily split the network until one side of the split eventually won out. We call the blocks that lose these splits “orphans”. This does already occur in Bitcoin, but with large enough blocks this would become a persistent problem. In addition, this problem actually creates a centralisation pressure as block sizes reach a point where orphans are common. As touched on earlier, miners that are better connected in the network gain an advantage over miners who are less well connected. When a high orphan rate favours larger miners, the network becomes more centralised.
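A rough way to see this effect is to model block discovery as a Poisson process, in which case the chance that a competing block appears while a block is still propagating is about 1 - exp(-delay / block interval). The sketch below uses that simplified model; the 2-second base delay for a 1MB block is a made-up figure, and only the 10-minute interval is Bitcoin's real target.

```python
import math

def orphan_probability(propagation_s: float, block_interval_s: float) -> float:
    """Chance that a competing block is found during the propagation delay (Poisson model)."""
    return 1.0 - math.exp(-propagation_s / block_interval_s)

BLOCK_INTERVAL_S = 600      # Bitcoin's 10-minute target
BASE_PROPAGATION_S = 2.0    # hypothetical delay for a 1MB block

for size_mb in (1, 10, 100, 1000):
    # Assumes delay grows in proportion to block size, as described above.
    delay = BASE_PROPAGATION_S * size_mb
    risk = orphan_probability(delay, BLOCK_INTERVAL_S)
    print(f"{size_mb:>5}MB  delay {delay:>6.0f}s  orphan risk {risk:.1%}")
```

Under these assumptions the risk is negligible for small blocks and becomes a persistent problem once propagation time approaches the block interval.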

Existing Solutions

Some DAG-based cryptocurrencies address this by allowing miners to point their blocks to multiple older blocks, so the chain does not need to be strictly sequential and no blocks need to be orphaned. Ethereum, while it used proof-of-work, addressed it by still paying part of the block reward to orphans. While these approaches do relieve the centralisation pressure caused by orphans, they do not solve scaling.

Nexa’s Solution

If that’s the case, then how does Nexa solve this issue? The first point to make is that there is much more to scaling than simply solving the orphan/bandwidth problem. You also need to solve the storage problem and the validation speed problem, but let’s tackle the orphan problem first.

Solving The Orphan Problem

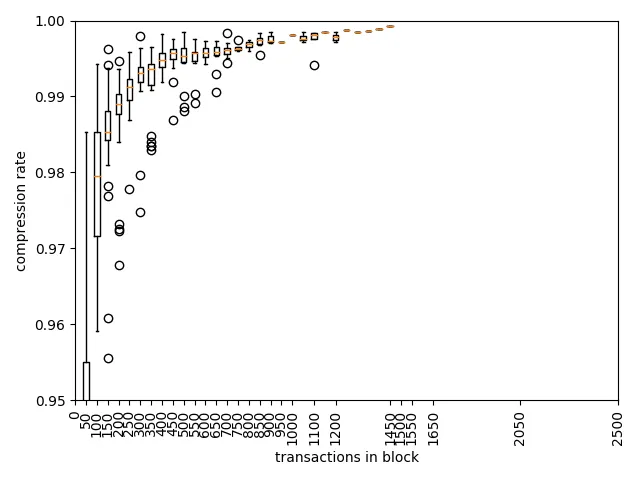

The incorrect assumption found across the crypto industry is that the amount of data that must be propagated per block is equal to the size of the header plus all the transactions in that block. This is false. Nexa currently only transfers 1% of this data to communicate each block. Whaaa? Yes, Nexa makes use of some very clever block compression technology called ‘Graphene’.

Graphene uses a key property of the network for scaling where nodes in the network already have, essentially, all the transaction data in their mempools. When a transaction is sent for a payment, it is propagated within seconds to the whole network. It is incredibly wasteful then, to re-transmit all that data a second time when a block solution is found. But how can nodes know specifically what transactions are going into a block from the mempool? This is where Graphene comes in. Graphene is a very clever technology to communicate between nodes how two sets of data differ from each other. The data needed to convey the differences between two mempools is considerably less than the data required to convey a block, enormously less data in fact. Even with small blocks, the data saving is 99%. The incredible thing about Graphene however, is that it gets more efficient as the blocks grow in size. This is because the mempools actually overlap more by percentage over time as the blocks grow in size.

While I am unsure of the exact numbers for much larger blocks, it may enable something like a 1000MB block to be propagated across the network using only 1MB of data or less. This solves the orphan problem.
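To give a feel for why so little data is needed, here is a deliberately simplified sketch that uses only a Bloom filter. Graphene itself pairs a Bloom filter with an IBLT (invertible Bloom lookup table) so the receiver can correct false positives and recover the exact block contents; that step is omitted here. The filter parameters, the 200-byte average transaction size, and all names are assumptions for illustration.

```python
import hashlib

class BloomFilter:
    """Tiny Bloom filter: never misses an added item, may rarely match extras."""

    def __init__(self, size_bits: int, num_hashes: int):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: bytes):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item: bytes) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: bytes) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))

def txid(n: int) -> bytes:
    return hashlib.sha256(str(n).encode()).digest()

# The receiver already holds 3,000 transactions; the new block contains 2,000 of them.
mempool = [txid(n) for n in range(3000)]
block_txids = mempool[:2000]

# Sender side: summarise the block's txids in a 5,000-byte filter.
bloom = BloomFilter(size_bits=40_000, num_hashes=7)
for t in block_txids:
    bloom.add(t)

# Receiver side: pass the local mempool through the filter to pick out the block.
candidates = [t for t in mempool if t in bloom]

ASSUMED_TX_BYTES = 200  # illustrative average transaction size
full_block_bytes = len(block_txids) * ASSUMED_TX_BYTES
print(f"filter: {len(bloom.bits)} bytes, full block: {full_block_bytes} bytes")
print(f"candidates selected: {len(candidates)} of {len(mempool)} mempool txs")
```

Every block transaction is guaranteed to be selected; the few false positives that can slip through are what the IBLT in the real protocol is there to remove.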

This only works because block times are not incredibly low. With a much lower block interval, for example one second, the amount of overlap of each miner’s mempool becomes much lower. This is because transactions take time to propagate across the network and during the propagation time, some nodes will have the transaction while others will not. This breaks the assumption of Graphene, that all nodes have almost identical mempools at the time a block solution is found.

Nexa actually has one more trick up its sleeve with Graphene. Nodes can use Graphene before a block solution is found, polling each other occasionally (for example every 30 seconds) to check how well synced their mempools are. This means they can pre-sync their mempools to increase the compression even further when a block solution is found.

Above are the results of research into the rate of compression provided by Graphene as blocks increase in size. As you can see, compression approaches 100% as the block size increases, meaning that orphan rates will remain low as blocks on Nexa increase enormously in size.

What this ultimately means is that the significant majority of data that needs to be sent across the network is just the transactions as they are created. For 100,000TPS, this is a data rate of 20MB/s which is already below many common internet connections.
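The arithmetic behind that figure can be checked in a few lines. The only inputs are the two numbers quoted above; the per-transaction size is what they imply, and megabytes are assumed to be decimal (1MB = 1,000,000 bytes).

```python
TPS = 100_000
DATA_RATE_BYTES_PER_S = 20_000_000   # 20MB/s, decimal megabytes assumed

# Average transaction size implied by the two figures.
bytes_per_tx = DATA_RATE_BYTES_PER_S / TPS

# The same rate expressed in the megabits per second that ISPs advertise.
megabits_per_s = DATA_RATE_BYTES_PER_S * 8 / 1_000_000

print(f"implied average transaction size: {bytes_per_tx:.0f} bytes")
print(f"required bandwidth: {megabits_per_s:.0f} Mbit/s")
```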

Solving The Storage Problem

BU (Bitcoin Unlimited) is working on a new UTXO commitment upgrade which will mean that miners only ever need the UTXO set instead of the full blockchain. This will make joining the network much faster, and the growth of the UTXO set should be linear (especially with the UTXO consolidation incentives we are considering). Solid state storage is decreasing in cost exponentially and a 1TB SSD already costs less than about $50. This is enough space for about 20 billion UTXOs. By the time we have achieved global adoption, that $50 of storage will more likely hold 200–400 billion UTXOs. Nodes would be able to afford much more than $50 for storage given the enormous transaction volume and fees they can collect. To put that in perspective, Bitcoin currently has about 80 million UTXOs and is estimated to have about the same number of ‘users’. A 1TB SSD could hold 250x the size of the Bitcoin UTXO set and therefore serve about 20 billion users at the same ratio. Or it could serve 8 billion users with a few times more UTXOs each.
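These storage figures fit together as follows. The sketch only re-derives the numbers quoted above; a decimal terabyte and a world population of 8 billion are the stated assumptions.

```python
SSD_BYTES = 10**12                 # 1TB, decimal
UTXOS_ON_SSD = 20 * 10**9          # about 20 billion UTXOs
BITCOIN_UTXOS = 80 * 10**6         # about 80 million UTXOs
WORLD_USERS = 8 * 10**9

bytes_per_utxo = SSD_BYTES // UTXOS_ON_SSD            # storage budget per UTXO
multiple_of_bitcoin = UTXOS_ON_SSD // BITCOIN_UTXOS   # size relative to Bitcoin's set
utxos_per_user = UTXOS_ON_SSD / WORLD_USERS           # if everyone on Earth used it

print(f"{bytes_per_utxo} bytes per UTXO")
print(f"{multiple_of_bitcoin}x Bitcoin's UTXO set")
print(f"{utxos_per_user} UTXOs per person at 8 billion users")
```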

That’s with today’s technology and without any off-chain scaling tricks.

Solving The Validation Problem

This is where Nexa really comes into its own. A full-node needs to do a variety of things to participate in the network. One of these is to validate transactions to make sure they are all following the consensus rules (e.g., no double-spends). To do so, it needs to do a few things including:

- Checking that the amount of NEX in the outputs does not exceed the amount of NEX in the inputs.

- Working through the script to check that it correctly validates.

- Checking the signatures provided are valid.

- Checking that the inputs are using valid UTXOs and are therefore not double-spends.
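The checks in this list can be sketched with a toy model. This is not Nexa's validation code: the `Outpoint` and `Transaction` types are hypothetical, and script evaluation and signature verification are reduced to a placeholder so the bookkeeping checks stay visible.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Outpoint:
    """Reference to one unspent output (hypothetical toy type)."""
    txid: str
    index: int

@dataclass
class Transaction:
    inputs: List[Outpoint]
    output_amounts: List[int]

def script_and_signatures_ok(tx: Transaction) -> bool:
    """Placeholder for the expensive steps: script evaluation and signature checks."""
    return True

def validate(tx: Transaction, utxo_set: Dict[Outpoint, int]) -> Tuple[bool, str]:
    # Every input must reference an existing, unspent output, and only once.
    if len(set(tx.inputs)) != len(tx.inputs):
        return False, "same output spent twice"
    for outpoint in tx.inputs:
        if outpoint not in utxo_set:
            return False, "input missing or already spent"
    # Outputs may not create more value than the inputs provide.
    total_in = sum(utxo_set[op] for op in tx.inputs)
    if sum(tx.output_amounts) > total_in:
        return False, "outputs exceed inputs"
    if not script_and_signatures_ok(tx):
        return False, "script or signature invalid"
    return True, "ok"

utxos = {Outpoint("aa", 0): 70, Outpoint("bb", 1): 30}
good = Transaction([Outpoint("aa", 0), Outpoint("bb", 1)], [60, 39])  # leaves a fee of 1
inflating = Transaction([Outpoint("aa", 0)], [80])
double_spend = Transaction([Outpoint("cc", 0)], [1])

for tx in (good, inflating, double_spend):
    print(validate(tx, utxos))
```

In a real node the placeholder dominates the cost, which is why the sections that follow focus on hashing, signatures, and UTXO lookups.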

Some parts of these functions require more computation than others, a lot more in fact. Specifically, SHA256 hashing, signature verification, and UTXO lookups all require much more time to complete than other parts of this process. This is because all of these functions are handled in generalised hardware, i.e., the CPU.

SHA256 Hashing

SHA256 hashing is a key technology underlying most cryptocurrencies, including Nexa. A hash is a digital cryptographic fingerprint for a specific piece of information. There are a number of places in Nexa where hash functions are used, including in transactions and in the mining algorithm. The change in the financial and energy cost of hashing between the launch of Bitcoin and now is the key concept behind Nexa’s scaling strategy.
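As a quick illustration of the fingerprint idea, Python's standard `hashlib` module shows how changing a single character of the input produces an unrelated hash. The message strings are made up for the example.

```python
import hashlib

original = hashlib.sha256(b"pay Alice 10 NEX").hexdigest()
tampered = hashlib.sha256(b"pay Alice 11 NEX").hexdigest()

print(original)
print(tampered)
print("fingerprints match:", original == tampered)
```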

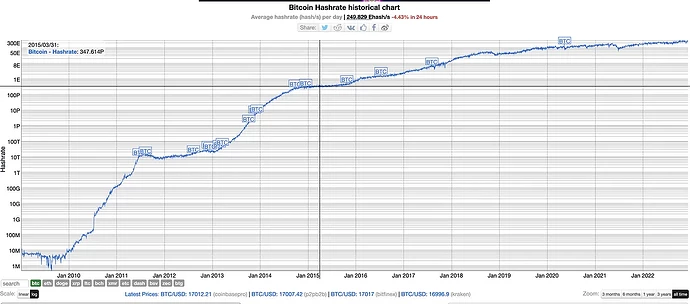

Looking at this logarithmic chart of Bitcoin’s network hashrate is the best example of this scaling. During the first year of Bitcoin’s existence, the network was capable of doing a total of a few million hashes per second with a single CPU being capable of perhaps ten thousand hashes per second or less. Today, the network is running 300 Exahashes per second and a single mining machine is capable of over 100TH/s. That is a 10,000,000,000x improvement in the speed of hashing per mining machine. It is unfathomable scaling and shows the incredible power of custom hardware.
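The improvement factor follows directly from the figures quoted above. The last line is an extra back-of-the-envelope estimate that assumes every machine on the network runs at the 100TH/s figure.

```python
CPU_HASHES_PER_S = 10_000              # early single-CPU estimate
MACHINE_HASHES_PER_S = 100 * 10**12    # 100TH/s mining machine
NETWORK_HASHES_PER_S = 300 * 10**18    # 300EH/s network

speedup = MACHINE_HASHES_PER_S // CPU_HASHES_PER_S
equivalent_machines = NETWORK_HASHES_PER_S // MACHINE_HASHES_PER_S

print(f"per-machine speedup: {speedup:,}x")
print(f"network equals about {equivalent_machines:,} such machines")
```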

Custom ASICs like these can compute a SHA256 hash around 10 billion times faster than the CPU figure above, and nodes must do this operation for every transaction, often many times per transaction. By using customised hardware to speed up this operation, this bottleneck is removed.

Signature Verification

Signature verification is another compute-heavy operation that Nexa nodes must do at least once for every transaction. Cryptographic signatures are a key part of transactions and smart contracts on Nexa but they are a big bottleneck.

The NexScale POW forces miners to process both SHA256 hashes and Schnorr signatures. The huge scaling incentives we have seen applied to Bitcoin hashing are now also applied to the Schnorr signatures found in Nexa.

A single RTX 4090 is now processing 220,000,000 signatures per second on Nexa. Over time this algorithm will be pushed into ever faster, more efficient, and cheaper hardware (i.e. FPGAs and eventually ASICs).

See this video for more details:

https://youtu.be/pDRCWcw5sAU

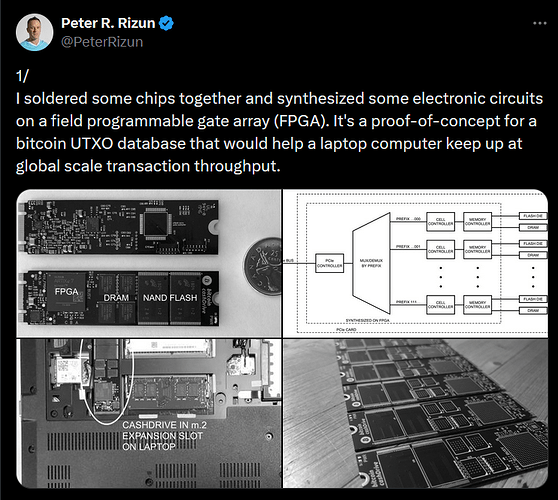

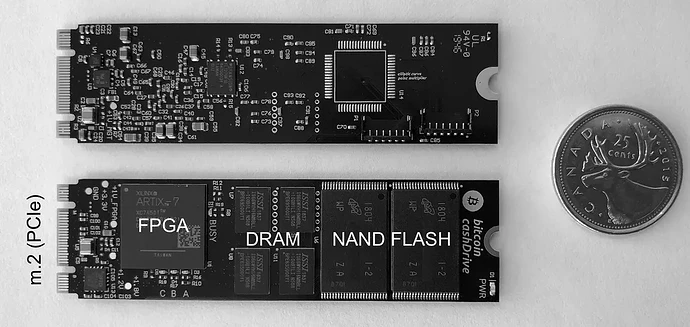

UTXO Lookups

The final part of Nexa’s scaling strategy is the UTXO lookup. This is also going to be put into the NexScale POW in a future protocol upgrade. This will require miners to do very fast, randomized lookups across the whole UTXO database to process the NexScale algo. Peter Rizun is already working on hardware to do this. See our resident Chief Scientist discussing his ideas and prototypes on X:

https://x.com/PeterRizun/status/1247554984968777729?s=20

Cashdrive has no software — 0 lines of code. The UTXO database is controlled with combinational logic gates and flip flops. It scales horizontally with hardware cells that operate in parallel, limited by the bandwidth of the PCIe bus, and economically by the price of memory.

For outputs that are stored in flash, throughput is limited by the ~100 us random page access time of the NAND flash dies. Each die has a dedicated controller on the FPGA for parallel access. 64 dies (4 x 2 Tbit flash chips) fit on a m.2 card, for a peak throughput >100k TPS.

The CashDrive prototype above produced in 2020 is already able to store 500GB of UTXOs, which is enough to store 10 billion UTXOs. Future iterations of this technology will enable significant improvements in both cost and capabilities. What this will achieve is some low-cost hardware that a full-node operator can put into their machine. This would allow them to store and process the full UTXO set of Nexa even if every person in the world is using it.
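The CashDrive figures quoted above can be sanity-checked with simple arithmetic. This sketch assumes each flash die serves one random page read at a time and that one UTXO lookup costs one page read; both are simplifications for illustration, not details taken from the prototype.

```python
PAGE_ACCESS_US = 100          # ~100 microsecond random page access
DIES = 64                     # dies on one m.2 card
TARGET_TPS = 100_000

reads_per_die_per_s = 1_000_000 // PAGE_ACCESS_US
reads_per_card_per_s = DIES * reads_per_die_per_s
lookups_per_tx_budget = reads_per_card_per_s / TARGET_TPS

STORAGE_BYTES = 500 * 10**9   # 500GB prototype
UTXO_COUNT = 10 * 10**9       # 10 billion UTXOs
bytes_per_utxo = STORAGE_BYTES // UTXO_COUNT

print(f"{reads_per_card_per_s:,} random reads per second per card")
print(f"about {lookups_per_tx_budget:.1f} lookups available per transaction at 100k TPS")
print(f"{bytes_per_utxo} bytes of storage per UTXO")
```

The 50 bytes per UTXO matches the budget implied by the 1TB SSD estimate earlier in the article.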

Bonus Feature: Programmability

Not only does Nexa fully solve the scalability trilemma, and enable everyone in the world to use the network at low cost and with high reliability, but it also provides a deep level of programmability. While this level of programmability does not reach the level of “Turing completeness”, it does enable practically all use cases that are found on EVM networks, and does so without weakening the system’s integrity while scaling.

Nexa makes use of the Pareto Principle to provide the smart-contract primitives necessary to produce enormous value while remaining scalable. Nexa uses resources wisely, which is why we say that Nexa has ‘wise-contracts’.

Each UTXO on Nexa is a self-contained smart-contract which can be evaluated on its own and validated in parallel with all other transactions in the mempool. Nexa wise-contracts can also be practically unlimited in complexity when building multi-UTXO architectures. Nexa also uses the Pareto Principle in another area, the op_code and script_code primitives. As the network scales and it becomes clear which features and use cases are becoming popular, the protocol has space to adopt any op_codes and script_codes to make them “official” for better efficiency and easier smart-contract design.
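The parallel-validation idea can be sketched with a worker pool. This is a structural illustration only: the dictionary layout and `validate_independently` are hypothetical stand-ins for real script evaluation, and Python threads are used for brevity even though they do not give true CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor

def validate_independently(tx: dict) -> bool:
    """Stand-in for evaluating one transaction using only its own data."""
    return sum(tx["outputs"]) <= sum(tx["inputs"])

# Toy mempool: each entry carries everything needed to judge it.
mempool = [
    {"id": "t1", "inputs": [50, 20], "outputs": [69]},
    {"id": "t2", "inputs": [10], "outputs": [11]},      # creates value, so invalid
    {"id": "t3", "inputs": [5, 5, 5], "outputs": [15]},
]

# No transaction depends on another's evaluation, so the work splits cleanly.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(validate_independently, mempool))

for tx, ok in zip(mempool, results):
    print(tx["id"], "valid" if ok else "invalid")
```

Because no shared state is touched during evaluation, the same structure maps onto multiple cores or dedicated hardware without coordination between workers.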

This is what will enable Nexa to compete with EVM functionality while maintaining scalability.

Summary

Nexa provides a cryptocurrency network capable of supporting all of humanity. This is done without causing centralization by removing the bottlenecks through huge software and hardware efficiency improvements. Nexa does this while simultaneously providing advanced financial functionality in the form of efficient, robust, and transparent smart-contracts as well as native tokenization.