You could verify the world’s transactions on the head of a pin, if you stop thinking in software loops and start thinking in silicon. It sounds like a punchline, but it’s not: this idea sits at the core of what we’re building with Nexa. It’s not a theory and not a whitepaper dream. It’s real infrastructure, grounded in physics, moving crypto toward serious, industrial-grade scale.

Nexa isn’t just a fast blockchain or a low-fee network, and it’s not some slogan about being better than Bitcoin or cheaper than Ethereum. It’s the first cryptocurrency actually designed to scale in hardware. That means it plays well with silicon: it works in parallel and validates the way machines are meant to compute. Smart contracts, native tokens, instant transactions: those are the things people see, but the real shift is in the backend. It’s the realization that instead of asking CPUs to grind through cryptographic math, we can hand the job to dedicated circuits, real logic, real transistors, and suddenly everything gets faster, smaller, lighter.

What’s Actually Holding Everyone Back

SHA-256 got optimized into the ground a decade ago. ASICs pushed it from desktop mining to global scale, squeezing out all the waste. But while hashing is fine, the rest of the system didn’t follow. Every transaction in crypto needs a signature check, and that’s not optional: if you want to prove you own your coins, a digital signature has to be verified. That means elliptic curve math, and that means computation. Now picture a world where five billion people use Nexa like cash, two transactions a day per person. That’s ten billion signature checks in twenty-four hours, or roughly 100,000 per second, and every full node has to verify them, live. Even the best CPUs barely get through twenty, maybe thirty thousand signature checks per second per core, so keeping up in software means burning silicon, spinning up server racks, wasting electricity. The only way this works is if we stop trying to optimize slow loops and start putting the math into hardware.

Why Nexa Fits This Future

Most chains don’t scale like this because they weren’t made for it: legacy architecture, mempool backlogs, tiny block sizes, and consensus tweaks that do more harm than good. Nexa is different. It was designed to operate without arbitrary limits, with signature-based double-spend proofs and instant transactions. All this creates a setup that’s actually friendly to parallel processing. What that means is: we can pipeline, we can batch, we can push the heavy math into hardware cores and let them do what they do best, raw computation, fast and cheap. Not just for hashing, but for everything from ECDSA verification to modular multiplication. It’s not a dream, it’s engineering. When people talk about scalability, they usually mean consensus tweaks, fancy L2s, or stuffing more data into blocks. At Nexa, we’re talking about something else: hardware-level scaling, because it’s not just about speed, it’s about architecture.

What the Hardware Actually Looks Like

Modern chips are dense. On 5 nm silicon, you can fit about 170 million transistors into a square millimeter. For a dedicated verifier core, one that does nothing but check ECDSA signatures, we only need about 5 million. That means 34 verifier cores in a single square millimeter, far smaller than your fingernail, and each core can handle about 200,000 signature checks per second. That’s 6.8 million verifications per second from a chip the size of a pinhead. One of those already covers the roughly 100,000 checks per second the whole planet would need, with room to spare, and the power draw is tiny. It’s not a server rack, it’s a component. This is where crypto stops being bulky and starts being invisible: embedded into devices, routers, phones, laptops, and, if we’re being ambitious, other infrastructure. This is what happens when the protocol is designed from the start to align with how real chips work.

Script Is the Last Bottleneck

One thing still stands in the way of full-speed acceleration: script execution. Bitcoin Script was never meant to be run on hardware. It’s stack-based, hard to compile, full of edge cases, and impossible to pipeline cleanly. You can’t just feed it into a logic block and go. At Nexa, we’re looking at RISC-V instead. That’s a real instruction set, built for hardware: open source, modular, and made to be implemented in silicon. With RISC-V, we can write contract logic that actually compiles, gets optimized, and runs predictably. More importantly, it makes smart contracts something you can run at the edge, in hardware, not just on big nodes, because once you move validation into the chip, everything else gets lighter: easier to audit, easier to sandbox, easier to secure. That’s the world we want.

The Bigger Picture

What Dr. Peter Rizun is doing here isn’t just about performance. It’s about what decentralization actually means when hardware gets involved. If full validation can happen on a tiny chip, you don’t need data centers, you don’t need trust, you don’t need infrastructure. All you need is something the size of a postage stamp, doing real work, verifying every transaction live. This is decentralization without compromise, and it’s what crypto was supposed to be from the start. Below, we invite you to dive into an article based on Dr. Peter Rizun’s presentation on hardware acceleration for cryptocurrency.

Dr. Peter Rizun’s Presentation: An Introduction to Hardware Acceleration for Cryptocurrency

1. Introduction: The Inevitable Shift to Hardware

At the intersection of cryptography, computer architecture, and economic systems lies a critical bottleneck that could define the future of global-scale digital currency: computational scalability. In his talk “An Introduction to Hardware Acceleration for Cryptocurrency”, Dr. Peter Rizun advances a compelling and pragmatic thesis: that the long-term viability of Bitcoin, and blockchain technologies more broadly, depends on transitioning core cryptographic operations from software-based execution to custom-designed digital hardware.

Dr. Peter Rizun opens with two ideas, each aimed squarely at current cryptocurrency orthodoxy. First, he asserts that specialized hardware is not merely an interesting performance optimization; it is inevitable for high-throughput, energy-efficient cryptographic computation. Second, he questions the continued reliance on Bitcoin Script, a deliberately restricted, stack-based scripting system used to define spending conditions for transactions. Instead, Dr. Peter Rizun proposes a RISC-V-based alternative, designed from the ground up for efficient hardware implementation. These two ideas form the thematic backbone of his presentation. The first relates to computational performance: how we execute cryptographic tasks like hashing and signature verification. The second concerns computational expressiveness and compatibility: how we define the rules that constrain digital asset movement in a decentralized system.

Dr. Peter Rizun introduces the concept of hardware acceleration with clarity and precision. By “hardware”, he means physical digital logic circuits that perform well-defined computations, such as SHA-256 hashing or elliptic curve multiplication. These circuits are not general-purpose processors; they are engineered for a single function and are thus radically optimized in both speed and efficiency. By “acceleration”, he refers to the performance gains realized by offloading computation from software, typically executed on general-purpose CPUs, to dedicated hardware. These gains are often measured in terms of reduced clock cycles, lower energy consumption, and smaller silicon footprint. This is important because the growth trajectory of Bitcoin implies a future where transaction throughput must scale by several orders of magnitude. Dr. Peter Rizun offers a global adoption scenario: five billion users, each executing two transactions per day, implies a steady-state load of roughly 100,000 transactions per second. Every one of those transactions must be validated by full nodes, which operate under resource constraints and are critical to the decentralization and censorship resistance of the network.

While Bitcoin’s hash function (SHA-256) has already undergone extensive hardware optimization, culminating in application-specific integrated circuits (ASICs) capable of billions of hashes per second, digital signature verification remains a critical bottleneck. This is not merely an inconvenience; it’s a structural limitation that constrains the network’s ability to scale safely, cheaply, and globally. Even more fundamentally, Dr. Peter Rizun notes that Bitcoin’s scripting system, originally intended to offer programmable control over coins, was designed with security and simplicity, not hardware compatibility, in mind. Bitcoin Script is a stack machine with limited expressiveness and non-deterministic resource requirements. This makes it inherently difficult to optimize, let alone translate into a hardware-executed form; it is, in effect, a legacy software abstraction inside what aspires to be a hardware-native financial protocol.

This leads to Dr. Peter Rizun’s core thesis, which he explores in depth over the rest of his presentation:

- Hardware acceleration is essential for scaling signature validation and other cryptographic operations beyond the limits of traditional software execution.
- Bitcoin’s scripting model should be redesigned, not merely patched, and a RISC-V-based architecture offers a promising alternative that aligns with modern hardware design principles.

Dr. Peter Rizun’s approach is methodical. He begins by grounding the audience in the fundamentals of digital logic (wires, gates, memory, and control) and then builds up through progressively more complex examples, culminating in an entirely new vision for transaction validation, one that embeds verifiable computation directly in silicon. It is a vision that is at once radical and refreshingly pragmatic.

2. Digital Logic Demystified: The Foundations of Hardware Acceleration

To appreciate the full power and inevitability of hardware acceleration, one must begin not with computers or software, but with wires, switches, and electrons. The fundamental insight Dr. Peter Rizun delivers is deceptively simple: digital logic circuits perform computation passively, continuously, and at the speed of physics—unlike software, which incurs an overhead of interpretation, scheduling, and instruction sequencing.

2.1 What is Hardware Acceleration?

Hardware acceleration refers to the use of physical electronic circuits, typically implemented in silicon, to carry out specific computations faster or more efficiently than software running on a general-purpose processor. These circuits are hard-wired to perform a fixed function, such as hashing or arithmetic operations, without the need for a traditional instruction loop. This is in stark contrast to a central processing unit (CPU), which is designed for flexibility. A CPU fetches, decodes, and executes instructions one at a time, using a complex control flow and multiple levels of memory caching and branch prediction. That generality comes at a cost: each computation takes dozens or even hundreds of clock cycles, consumes relatively large amounts of power, and occupies a significant silicon area. In contrast, a hardware accelerator such as an ASIC (Application-Specific Integrated Circuit) or a dedicated hardware module implemented on an FPGA (Field-Programmable Gate Array) executes its computation in fixed, parallel circuits, often requiring just one clock cycle per operation and consuming orders of magnitude less energy per operation. This tradeoff between flexibility and performance underpins the case for hardware acceleration in cryptocurrency, especially in components like SHA-256 hashing and digital signature verification, which are known in advance, unchanging, and computationally intense.

2.2 The Building Blocks of Digital Logic

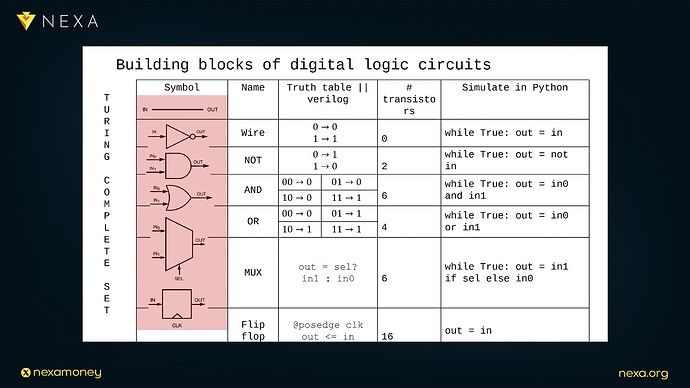

To understand why hardware is so powerful, we must look at the elementary components that make up a digital circuit:

- Wire: The most basic component. A wire is simply a physical path for an electrical signal. If a wire has 0 volts, it represents a logical 0; if it has 1.2 volts (or another high voltage level), it represents a logical 1. Unlike software, which must execute a while-loop to propagate a value from one variable to another, a wire propagates its signal instantly (or more accurately, at the speed of light within the material). It is “always on,” requiring no instruction to activate.

In Dr. Peter Rizun’s analogy, a wire is like a free while-loop in hardware: its output always matches its input, with no overhead.

- NOT Gate: Also known as an inverter, this component takes a single input and outputs its logical complement. If the input is 1, the output is 0, and vice versa.
- AND Gate: Outputs 1 if and only if all of its inputs are 1.
- OR Gate: Outputs 1 if any of its inputs are 1.
- Multiplexer (MUX): A controlled switch. It routes one of two input signals to the output based on the value of a third “select” signal. MUXes are fundamental to building programmable behavior in hardware.
- Flip-Flop: A memory element. A flip-flop stores a single bit and updates its output on the rising edge of a clock signal. This allows a circuit to maintain state across clock cycles, an essential property for sequencing and memory.

Together, these components form what is known as a Turing-complete set. In other words, any computable function can be implemented as a circuit composed of these basic gates and flip-flops. This is the hardware analog to a universal programming language: it can compute anything that is logically computable, but does so via static spatial structures instead of dynamic, time-based instruction flows.
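As a rough illustration of how these parts compose, the Python sketch below models each gate as a function on single bits and builds a 1-bit register with a load-enable out of a MUX and a flip-flop. The names (`mux`, `FlipFlop`, `EnableRegister`) are illustrative assumptions, not taken from the talk, and a software model like this only mimics the behavior; it has none of the parallelism of real gates.

```python
def not_gate(a: int) -> int:
    return 1 - a

def and_gate(a: int, b: int) -> int:
    return a & b

def or_gate(a: int, b: int) -> int:
    return a | b

def mux(select: int, a: int, b: int) -> int:
    """Route a to the output when select is 0, b when select is 1."""
    return or_gate(and_gate(not_gate(select), a), and_gate(select, b))

class FlipFlop:
    """Stores one bit; the stored bit changes only when clock() is called."""
    def __init__(self) -> None:
        self.q = 0

    def clock(self, d: int) -> int:
        self.q = d
        return self.q

class EnableRegister:
    """1-bit register: loads d when enable is 1, otherwise keeps its value."""
    def __init__(self) -> None:
        self.ff = FlipFlop()

    def clock(self, enable: int, d: int) -> int:
        # The MUX feeds either the old value or the new input back to the flip-flop.
        return self.ff.clock(mux(enable, self.ff.q, d))

reg = EnableRegister()
# One (enable, d) pair per simulated clock edge.
trace = [reg.clock(enable, d) for enable, d in [(1, 1), (0, 0), (0, 0), (1, 0)]]
print(trace)  # [1, 1, 1, 0]: the bit is held while enable is 0
```

The register keeps its value across the two middle clock edges because the MUX routes the flip-flop's own output back to its input, which is the basic mechanism behind state in a circuit.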

2.3 From Bits to Words, From Logic to Computation

In this world of hardware, a bit is the atomic unit of data, a 0 or 1 conveyed by a wire or stored in a flip-flop. A word is a collection of bits, typically 8, 16, 32, or 64, that travel together on a bus (a bundle of wires). Collections of flip-flops are called registers, and these hold entire words of data, ready for computation or storage.

A bus carries words; flip-flops store them. And with just these constructs—wires, gates, flip-flops, buses, and registers—we can build entire processors, crypto accelerators, or even Turing machines.

What makes this paradigm powerful is that everything is happening simultaneously. All the gates in a digital circuit are evaluating their inputs continuously, and their outputs update as fast as the signals propagate, often within picoseconds or nanoseconds. There is no loop to be unrolled, no instruction to be fetched, no memory hierarchy to be traversed. This parallelism and immediacy is what gives hardware its overwhelming advantage.

To drive the point home, Dr. Peter Rizun offers a direct comparison: imagine simulating the behavior of a simple wire in Python. You would need a while-loop that reads the input and assigns it to an output, and even with an optimized interpreter, this requires hundreds of instruction cycles. A wire in hardware, by contrast, performs the same task instantly, for free.

3. SHA-256 in Silicon: A Case Study in Hardware Efficiency

SHA-256, short for Secure Hash Algorithm 256-bit, is a cryptographic hash function that lies at the heart of Bitcoin’s proof-of-work algorithm and block structure. While originally standardized by NIST and designed for secure message digestion, SHA-256 has become the computational backbone of Bitcoin mining and, as Dr. Peter Rizun illustrates, a textbook example of why hardware acceleration matters.

3.1 The Anatomy of SHA-256

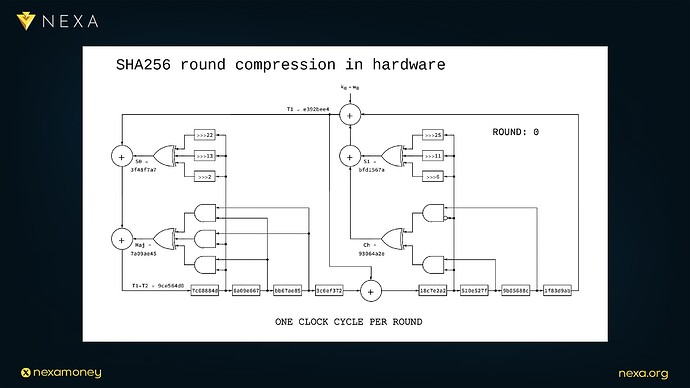

The SHA-256 algorithm takes as input a message of arbitrary length and compresses it into a fixed 256-bit digest. At its core is a compression function that performs 64 rounds of transformation on a 512-bit block of the message. Each round applies a sequence of bitwise logical operations, additions, and rotations, all governed by a fixed set of constants and a message word schedule.

Dr. Peter Rizun zooms in on the inner workings of this compression function, illustrating it as a graph of interconnected digital components:

- AND gates and XOR gates perform boolean operations on 32-bit words.
- Rotators rotate bits by fixed amounts, essential for avalanche properties in the output.
- Adders carry out modular addition of 32-bit words.
- Registers store the intermediate hash state across rounds, comprising eight 32-bit values, 256 bits in total.

This layout is deeply amenable to hardware implementation. Because all gates operate concurrently and propagate results almost instantly, the entire round function can be built as a pipeline that completes one full round of SHA-256 per clock cycle. There are no branches, no dynamic control flow, and no need to fetch instructions, just data flowing through gates.
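To make the round structure concrete, here is a minimal Python sketch of the SHA-256 compression function, written so that `sha256_round` corresponds to the logic a hardware design would evaluate in one clock cycle. It follows the public SHA-256 specification rather than any circuit shown in the talk. The helper names (`icbrt`, `first_primes`, `sha256_short`) are assumptions made for this example, the constants are derived from prime roots instead of being listed, and only messages shorter than 56 bytes (a single block) are handled.

```python
import hashlib
import math

MASK = 0xFFFFFFFF  # all arithmetic is on 32-bit words

def rotr(x: int, n: int) -> int:
    return ((x >> n) | (x << (32 - n))) & MASK

def icbrt(n: int) -> int:
    """Integer cube root by binary search."""
    lo, hi = 0, 1 << ((n.bit_length() + 2) // 3 + 1)
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if mid ** 3 <= n:
            lo = mid
        else:
            hi = mid - 1
    return lo

def first_primes(count: int) -> list:
    primes, c = [], 2
    while len(primes) < count:
        if all(c % p for p in primes):
            primes.append(c)
        c += 1
    return primes

PRIMES = first_primes(64)
# Round constants: fractional bits of the cube roots of the first 64 primes.
K = [icbrt(p << 96) & MASK for p in PRIMES]
# Initial state: fractional bits of the square roots of the first 8 primes.
H0 = [math.isqrt(p << 64) & MASK for p in PRIMES[:8]]

def sha256_round(state, k, w):
    """One round: pure AND/XOR/rotate/add logic on eight 32-bit registers."""
    a, b, c, d, e, f, g, h = state
    s1 = rotr(e, 6) ^ rotr(e, 11) ^ rotr(e, 25)
    ch = (e & f) ^ (~e & MASK & g)
    t1 = (h + s1 + ch + k + w) & MASK
    s0 = rotr(a, 2) ^ rotr(a, 13) ^ rotr(a, 22)
    maj = (a & b) ^ (a & c) ^ (b & c)
    t2 = (s0 + maj) & MASK
    return ((t1 + t2) & MASK, a, b, c, (d + t1) & MASK, e, f, g)

def schedule(block: bytes) -> list:
    """Expand the 16 message words of a 64-byte block into 64 round words."""
    w = [int.from_bytes(block[i:i + 4], "big") for i in range(0, 64, 4)]
    for t in range(16, 64):
        s0 = rotr(w[t - 15], 7) ^ rotr(w[t - 15], 18) ^ (w[t - 15] >> 3)
        s1 = rotr(w[t - 2], 17) ^ rotr(w[t - 2], 19) ^ (w[t - 2] >> 10)
        w.append((w[t - 16] + s0 + w[t - 7] + s1) & MASK)
    return w

def compress(h, block: bytes) -> list:
    w = schedule(block)
    state = tuple(h)
    for t in range(64):  # in hardware: 64 clock cycles
        state = sha256_round(state, K[t], w[t])
    return [(x + y) & MASK for x, y in zip(h, state)]

def sha256_short(msg: bytes) -> bytes:
    """SHA-256 for messages that fit in one padded block (under 56 bytes)."""
    assert len(msg) < 56
    block = msg + b"\x80" + b"\x00" * (55 - len(msg)) + (8 * len(msg)).to_bytes(8, "big")
    return b"".join(x.to_bytes(4, "big") for x in compress(H0, block))

print(sha256_short(b"abc") == hashlib.sha256(b"abc").digest())
```

Note that `sha256_round` contains no branches and no loops: every line is a fixed combination of gates, rotations (which are just wiring), and adders, which is why it maps so directly onto a circuit.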

3.2 Software Implementation: The Slow Path

Now consider implementing the same algorithm in software, such as on an x86 CPU. Each register becomes a named variable in memory, and each gate becomes a line of code: a logical AND, XOR, or shift instruction. To build the SHA-256 feedback loop, a general-purpose CPU must execute dozens of discrete operations per round. To illustrate the performance gap, Dr. Peter Rizun had an AI assistant convert one round of the SHA-256 compression function into x86 assembly. The result: 54 assembly instructions per round. Since each instruction consumes approximately one clock cycle on a modern processor (more under certain conditions), one round takes about 54 cycles. For the full 64-round SHA-256 block, this adds up to 54 × 64 = 3,456 cycles. This kind of instructional serialization reflects the general-purpose nature of CPUs: they are optimized for flexibility, not throughput. The result is orders of magnitude less efficiency than custom logic.

3.3 Hardware Implementation: The Fast Lane

Contrast this with a custom SHA-256 circuit. In hardware:

- One round = one clock cycle.
- Entire 64-round compression = 64 cycles.
- Transistor count: ~20,000.

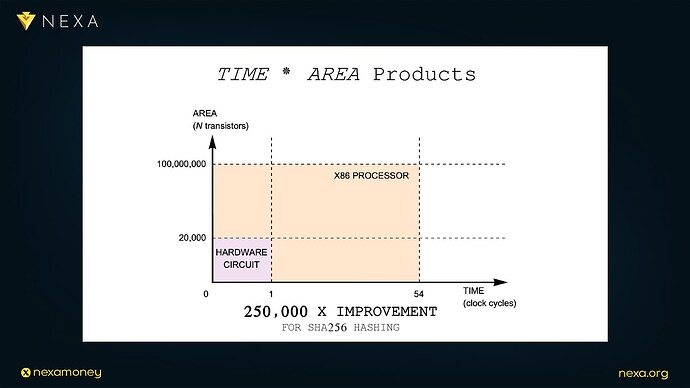

This is not a hypothetical circuit. SHA-256 has been implemented in hardware millions of times, in Bitcoin ASICs, embedded devices, and secure hardware modules. The design is simple, elegant, and incredibly efficient when translated into silicon. Dr. Peter Rizun introduces the concept of a time–area product to quantify this efficiency. On a graph with time (in clock cycles) on the horizontal axis and area (in number of transistors) on the vertical axis, hardware occupies a small square: 1 cycle × 20,000 transistors per round. Software, by contrast, occupies a much larger rectangle: 54 cycles × 100 million transistors, assuming a modern x86 core.

The result is a dramatic performance-per-area improvement:

Hardware SHA-256 is roughly 250,000× more efficient than its software counterpart (taken exactly, the figures above give 54 × 5,000 = 270,000×).

This matters because transistor area and clock cycles are scarce, expensive resources in system design. Less area means lower cost per chip and the ability to run many parallel circuits; fewer cycles means lower power consumption and higher throughput.
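The time–area comparison is easy to reproduce. The short sketch below uses only the figures quoted in this section (54 cycles and roughly 100 million transistors for software, 1 cycle and roughly 20,000 transistors for hardware); the variable names are illustrative assumptions.

```python
ROUNDS = 64

# Figures quoted in the text, per SHA-256 round.
SW_CYCLES_PER_ROUND = 54
SW_TRANSISTORS = 100_000_000   # assumed size of a modern x86 core
HW_CYCLES_PER_ROUND = 1
HW_TRANSISTORS = 20_000

def time_area(cycles: int, transistors: int) -> int:
    """Time-area product: clock cycles multiplied by transistor count."""
    return cycles * transistors

sw_cycles_per_block = SW_CYCLES_PER_ROUND * ROUNDS   # 3,456
hw_cycles_per_block = HW_CYCLES_PER_ROUND * ROUNDS   # 64

efficiency_ratio = (
    time_area(SW_CYCLES_PER_ROUND, SW_TRANSISTORS)
    // time_area(HW_CYCLES_PER_ROUND, HW_TRANSISTORS)
)

print(f"software: {sw_cycles_per_block} cycles per block")
print(f"hardware: {hw_cycles_per_block} cycles per block")
print(f"time-area advantage: {efficiency_ratio:,}x")  # 270,000x
```

With these inputs the ratio comes out at 270,000, in line with the order of magnitude quoted above; the exact value depends heavily on the assumed transistor count of the CPU core.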

3.4 Real-World Validation: Mining and Energy Economics

These performance claims are not theoretical: they have played out in the evolution of Bitcoin mining. When Satoshi Nakamoto first released the Bitcoin client in 2009, it performed SHA-256 hashing on the CPU, and a typical desktop machine could perform approximately 100,000 hashes per joule of electrical energy. Today, state-of-the-art ASIC miners (e.g., Bitmain’s S19 series) achieve more than 60 billion hashes per joule. That’s a 600,000× improvement in energy efficiency, almost entirely driven by hardware acceleration. This dramatic leap came not from new cryptographic breakthroughs or software optimization, but from the industrialization of SHA-256 into custom silicon. It is the most visible example in the cryptocurrency ecosystem of how hardware shapes economic incentives, technical feasibility, and global accessibility. In short, SHA-256 shows us what is possible when critical operations are reimagined in hardware. But, as Dr. Peter Rizun warns, hashing is no longer the bottleneck. The new frontier, the true computational barrier to scaling, is digital signature verification.

4. The True Bottleneck: Signature Verification at Scale

While SHA-256 hashing has been industrialized into silicon, digital signature verification remains almost entirely reliant on general-purpose CPUs. Yet, as Dr. Peter Rizun explains, this operation is fundamental to the validation of every Bitcoin transaction. Each signature must be checked against the transaction data and a public key to ensure its legitimacy, something no full node can skip without giving up full validation.

Dr. Peter Rizun proposes a thought experiment to visualize Bitcoin at scale. Imagine a globally adopted cryptocurrency where 5 billion users make 2 transactions per day. That equates to:

- 10 billion transactions/day
- ≈ 100,000 transactions/second (sustained)

If every transaction includes at least one signature, this means a full node must process roughly 100,000 signature verifications per second just to keep up with the global flow of funds. This is before accounting for mempool management, orphan blocks, or advanced contract logic. The current state of affairs is insufficient. A modern high-performance CPU core can perform around 20,000 signature verifications per second, or 50 microseconds per verification. Reaching 100,000 verifications per second would require at least five cores running flat out, and realistically more, given I/O overhead and competing processes. Worse, such a computational load rules out low-power, embedded full nodes, the very kind of node Dr. Peter Rizun imagines plugged into a router, running silently and autonomously. If full validation requires an entire desktop-class system, decentralization suffers. Lightweight validation must be both affordable and sustainable, especially for the 80% of the world that lacks high-performance hardware.
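The load estimate can be checked with a few lines of arithmetic. The sketch below uses the scenario's own inputs and assumes exactly one signature per transaction, which is a simplification; the variable names are illustrative.

```python
import math

USERS = 5_000_000_000
TX_PER_USER_PER_DAY = 2
SECONDS_PER_DAY = 24 * 60 * 60
CPU_VERIFICATIONS_PER_SECOND = 20_000   # per core, figure quoted in the text

tx_per_day = USERS * TX_PER_USER_PER_DAY
tx_per_second = tx_per_day / SECONDS_PER_DAY   # about 115,741

# Cores needed for the rounded 100,000/s figure, and for the unrounded load.
cores_for_rounded_load = math.ceil(100_000 / CPU_VERIFICATIONS_PER_SECOND)
cores_for_exact_load = math.ceil(tx_per_second / CPU_VERIFICATIONS_PER_SECOND)

print(f"{tx_per_day:,} transactions per day")
print(f"{tx_per_second:,.0f} transactions per second")
print(f"CPU cores needed: {cores_for_rounded_load} (rounded load), "
      f"{cores_for_exact_load} (exact load)")
```

The unrounded figure is a little under 116,000 transactions per second, so the "at least five cores" estimate becomes six once the rounding is removed, before any allowance for I/O or other work.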

4.1 The Mathematical Core: Elliptic Curve Signature Verification

Signature verification in Bitcoin uses the Elliptic Curve Digital Signature Algorithm (ECDSA) over the secp256k1 curve. The bulk of the work in verification is elliptic curve scalar multiplication:

$$Q = k \cdot G$$

Where:

- $G$ is the generator point of the curve
- $k$ is a 256-bit scalar (secret when signing; during verification, the scalars are derived from the signature and the message hash)
- $Q$ is the resulting curve point (used to validate the signature)

This process involves modular arithmetic on 256-bit integers, a computationally intense operation requiring many intermediate steps:

- Modular addition
- Modular inversion
- Modular multiplication
- Coordinate transformations
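For readers who want to see these operations together, here is a minimal, unoptimized Python sketch of ECDSA verification on secp256k1 using textbook double-and-add scalar multiplication in affine coordinates. It is an illustration under stated assumptions, not the circuit from the talk: the function names are made up for this example, the `sign` helper uses a fixed nonce purely so the demo is reproducible (which would be insecure in practice), and none of the code is constant-time.

```python
import hashlib

# secp256k1 domain parameters (public constants).
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def point_add(p1, p2):
    """Add two curve points; None stands for the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:   # doubling: modular multiplication plus one inversion
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)

def scalar_mul(k, point):
    """Compute k * point by double-and-add."""
    result, addend = None, point
    while k:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result

def sign(private_key, z, nonce):
    """Demo-only signer: a fixed nonce is insecure and used here for repeatability."""
    r = scalar_mul(nonce, G)[0] % N
    s = pow(nonce, -1, N) * (z + r * private_key) % N
    return r, s

def verify(public_key, z, signature):
    r, s = signature
    if not (0 < r < N and 0 < s < N):
        return False
    w = pow(s, -1, N)                      # modular inversion
    u1, u2 = z * w % N, r * w % N          # scalars derived from public data
    point = point_add(scalar_mul(u1, G), scalar_mul(u2, public_key))
    return point is not None and point[0] % N == r

private_key = 0x1234567890ABCDEF          # hypothetical key for the demo
public_key = scalar_mul(private_key, G)
z = int.from_bytes(hashlib.sha256(b"pay 1 coin to Alice").digest(), "big")
signature = sign(private_key, z, nonce=0xC0FFEE)

print(verify(public_key, z, signature))       # True
print(verify(public_key, z + 1, signature))   # False: message hash changed
```

Each call to `verify` performs two full scalar multiplications, and every step inside them reduces to 256-bit modular additions, multiplications, and inversions, which is where a hardware multiplier earns its keep. Production implementations avoid the per-step inversion by switching to projective coordinates, one form of the coordinate transformations listed above.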

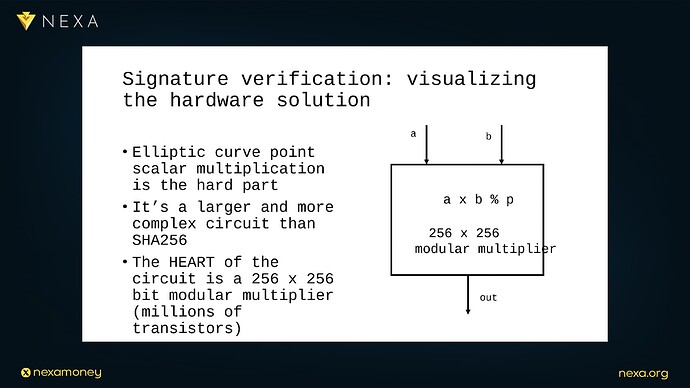

Rizun captures this visually with a metaphor: a “gymnastics team” of workers moving 256-bit numbers through a hardware pipeline. It’s a large, intricate circuit, with one central machine, a 256×256-bit modular multiplier, at its heart. This multiplier consumes two 256-bit numbers per clock cycle and outputs their modular product, computed mod the curve’s prime:

$$P = (A \cdot B) \bmod p$$

Surrounding this multiplier are feeder and catcher circuits. Some workers prepare inputs for the multiplier; others post-process the results, doubling or adding curve points as needed. All are orchestrated to keep the multiplier fed at full speed, because every nanosecond matters.
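One reason a modular multiplier for secp256k1 can be compact is the special shape of the curve's prime, $p = 2^{256} - 2^{32} - 977$. Because $2^{256} \equiv 2^{32} + 977 \pmod p$, the upper half of a 512-bit product can be folded back into the lower half with a small multiplication instead of a general division. The sketch below shows this well-known folding technique in Python; it is an assumption about how such a multiplier might reduce its result, not a description of the design in the talk, and `mulmod` is a name chosen for this example.

```python
P = 2**256 - 2**32 - 977      # secp256k1 field prime
C = 2**32 + 977               # 2**256 is congruent to C modulo P
MASK256 = (1 << 256) - 1

def mulmod(a: int, b: int) -> int:
    """Compute (a * b) mod P by folding high bits instead of dividing."""
    x = a * b                               # up to 512 bits wide
    while x >> 256:                         # at most three folds are needed
        x = (x >> 256) * C + (x & MASK256)  # high part times C, plus low part
    if x >= P:                              # x < 2**256 < 2*P, so one subtraction suffices
        x -= P
    return x

a = 0x1F3D5B79A1C3E5F70123456789ABCDEF0FEDCBA9876543210F1E2D3C4B5A6978
b = P - 1
print(mulmod(a, b) == (a * b) % P)
```

In a circuit, each fold is a shift, a narrow multiplication by a 33-bit constant, and an addition, all of which are cheap compared with a general-purpose divider.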

4.2 The Hardware Design: Compact, Fast, Purpose-Built

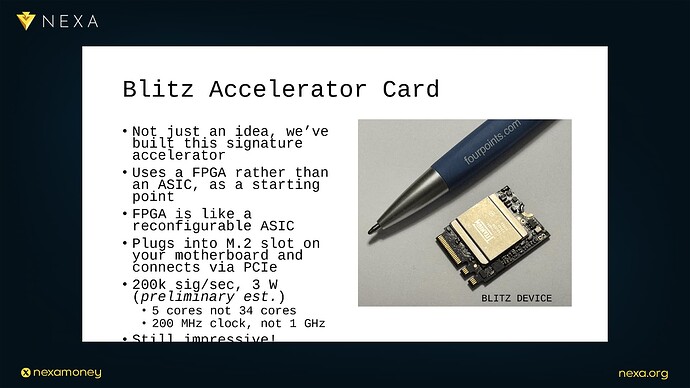

This circuit is complex, yes, but not out of reach. Dr. Peter Rizun estimates that a full elliptic curve signature verifier can be built with about 5 million transistors, a tiny fraction of a modern CPU core. The result: a system that can perform a full signature verification in just 5,000 clock cycles.

At a clock speed of 1 GHz, this translates to:

- 5 microseconds per signature
- 200,000 signature verifications per second per core

Compare that to the general-purpose CPU:

- 50 microseconds per signature
- 20,000 verifications per second per core
- ~100 million transistors per core

The implication is profound: this specialized hardware is 10× faster and 20× smaller, giving a total 200× efficiency improvement in the time-area product. This matters enormously in system architecture, because it defines:

- Energy use per signature
- Heat output per chip
- Total verifications per dollar of silicon
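The conversion from cycle counts to these headline numbers is short enough to spell out. The following sketch uses only the figures quoted in this section; the variable names are illustrative.

```python
CLOCK_HZ = 1_000_000_000          # 1 GHz
HW_CYCLES_PER_SIGNATURE = 5_000
HW_TRANSISTORS = 5_000_000
CPU_SIGNATURES_PER_SECOND = 20_000
CPU_TRANSISTORS = 100_000_000

hw_microseconds = HW_CYCLES_PER_SIGNATURE / CLOCK_HZ * 1e6
hw_signatures_per_second = CLOCK_HZ // HW_CYCLES_PER_SIGNATURE
cpu_microseconds = 1e6 / CPU_SIGNATURES_PER_SECOND

speedup = hw_signatures_per_second // CPU_SIGNATURES_PER_SECOND
area_ratio = CPU_TRANSISTORS // HW_TRANSISTORS
time_area_gain = speedup * area_ratio

print(f"hardware: {hw_microseconds:.0f} us per signature, "
      f"{hw_signatures_per_second:,} per second")
print(f"CPU: {cpu_microseconds:.0f} us per signature")
print(f"{speedup}x faster, {area_ratio}x smaller, {time_area_gain}x time-area gain")
```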

4.3 Why It Matters: Nodes, Not Miners

Crucially, this isn’t about mining. It’s about validation, the foundational responsibility of full nodes. Hardware-accelerated verification doesn’t change Bitcoin’s security assumptions; it merely makes full validation more accessible, less costly, and dramatically more scalable. In Dr. Peter Rizun’s vision, anyone should be able to run a node on a tiny chip, plugged into a router, powered over USB, and silently verifying the global Bitcoin ledger. This is only possible if we offload expensive cryptographic operations like signature verification to dedicated, massively efficient silicon. In the next section, Dr. Peter Rizun explores just how small and inexpensive such systems could be, by asking a simple but ingenious question: Can we validate the world’s Bitcoin transactions on the head of a pin?

5. Verifying the World’s Transactions on the Head of a Pin

In this section of his presentation, Dr. Peter Rizun shifts from theoretical efficiency to a startling physical realization: global-scale Bitcoin validation could literally fit on the head of a pin. This isn’t a metaphor. It’s a direct consequence of silicon scaling, transistor density, and the radical efficiency of hardware-accelerated cryptographic logic.

5.1 The Physical Reality of Modern Silicon

Dr. Peter Rizun begins with a practical question: How much logic can we pack into a square millimeter of silicon using modern fabrication processes?

The answer, using TSMC’s 5nm process (the same used in advanced mining ASICs), is approximately:

- 170 million transistors per mm²

This figure defines the transistor density, a key constraint in chip design, which determines how much functionality you can integrate into a physical die. With his earlier estimate that each signature verifier core requires ~5 million transistors, Dr. Peter Rizun does the simple division:

170 million ÷ 5 million = 34 verifier cores per mm²

That is, a single square millimeter of silicon can host 34 parallel signature verifier cores, each running independently, and each of these cores, thanks to its highly optimized design, can verify roughly:

- 200,000 ECDSA signatures per second

So the total throughput per mm² is:

34 × 200,000 = 6.8 million signature verifications per second

This number is nearly 70× the transaction load of a globally adopted Bitcoin network (which we estimated earlier at 100,000 tx/sec). That means, in principle, a die the size of a pinhead could handle global Bitcoin validation on its own, with ample headroom.
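The pinhead estimate chains three numbers together, and it can help to see them in one place. This sketch uses the density, core size, and per-core rate quoted above, and ignores everything a real die also needs (I/O pads, memory, routing), so it is an upper bound on the logic alone.

```python
TRANSISTORS_PER_MM2 = 170_000_000      # quoted density for a 5 nm process
TRANSISTORS_PER_CORE = 5_000_000       # estimated verifier core size
SIGNATURES_PER_CORE_PER_SECOND = 200_000
GLOBAL_LOAD_PER_SECOND = 100_000       # the adoption scenario from section 4

cores_per_mm2 = TRANSISTORS_PER_MM2 // TRANSISTORS_PER_CORE
throughput_per_mm2 = cores_per_mm2 * SIGNATURES_PER_CORE_PER_SECOND
headroom = throughput_per_mm2 // GLOBAL_LOAD_PER_SECOND

print(f"{cores_per_mm2} cores per mm^2")
print(f"{throughput_per_mm2:,} verifications per second per mm^2")
print(f"{headroom}x the global load")
```

Put differently, the scenario's entire load would occupy a single core only about half the time; the other 33 cores are spare capacity.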

5.2 The Economics of Validation at Scale

Of course, building an ASIC (application-specific integrated circuit) is not free. Dr. Peter Rizun explores the cost using current fabrication economics:

- TSMC charges approximately $18,000 per wafer
- A 300mm wafer yields ~50,000 chips, assuming each chip is 1 mm²
- That yields a per-chip cost of ~$0.36

So for less than 40 cents, one could fabricate a chip capable of validating every single Bitcoin transaction on Earth, continuously, in real time. Naturally, this estimate doesn’t include non-recurring engineering (NRE) costs, such as design validation, tape-out, mask generation, or testing. These upfront costs can be in the millions of dollars, but they are fixed: once paid, they amortize quickly over volume, and the marginal cost of each additional chip drops to cents. This leads to a remarkable insight: validation, at scale, is not constrained by cost, energy, or physical space. It is constrained by system architecture and software compatibility, in particular by how well Bitcoin’s validation logic maps onto hardware.
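A small calculation shows how the per-chip figure arises and how NRE changes the picture at different volumes. The wafer price and chip count come from the text; the NRE amount and production volumes in the example are purely hypothetical placeholders, since the source only says these costs "can be in the millions".

```python
import math

WAFER_COST_USD = 18_000
CHIPS_PER_WAFER = 50_000          # quoted yield for 1 mm^2 dies
WAFER_DIAMETER_MM = 300

marginal_cost = WAFER_COST_USD / CHIPS_PER_WAFER           # about $0.36

# Sanity check: what fraction of the raw wafer area do 50,000 dies of 1 mm^2 use?
wafer_area_mm2 = math.pi * (WAFER_DIAMETER_MM / 2) ** 2    # about 70,686 mm^2
area_utilization = CHIPS_PER_WAFER / wafer_area_mm2        # about 0.71

def cost_per_chip(nre_usd: float, volume: int) -> float:
    """Marginal cost plus NRE spread evenly over the production volume."""
    return marginal_cost + nre_usd / volume

HYPOTHETICAL_NRE_USD = 5_000_000  # assumption for illustration only
for volume in (100_000, 1_000_000, 10_000_000):
    print(f"{volume:>10,} chips: ${cost_per_chip(HYPOTHETICAL_NRE_USD, volume):.2f} each")
```

The quoted 50,000 chips use roughly 70% of the wafer's raw area, which leaves a plausible margin for edge loss, scribe lines, and defective dies.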

5.3 The “Node on a Chip” Vision

If the world’s validation needs can fit on a pinhead, what could a Bitcoin full node look like in this hardware future?

Dr. Peter Rizun sketches an elegant, low-power vision:

- A small, ASIC-powered device with signature cores, SHA-256 hashing blocks, and minimal RAM
- Plugged directly into a router or home server
- Accessible via SSH, running quietly and passively, consuming only a few watts

This is a radical shift from today’s node infrastructure. Current Bitcoin Core nodes require full desktop-class CPUs, ample RAM, and terabytes of storage. They must be manually managed, upgraded, and powered by grid electricity.

By contrast, a node-on-a-chip is:

- Eco-friendly (only a few watts of power)
- Decentralization-friendly (no high-end hardware required)
- Always-on and resilient

This would fulfill one of Bitcoin’s deepest design ideals: making validation universally accessible. Instead of relying on third-party APIs or trusting remote infrastructure, users could run a full node in their home, or in their pocket.

Dr. Peter Rizun is careful to note a key caveat: while computation is now trivial, there remains a harder problem, access to state, specifically the Unspent Transaction Output (UTXO) set. This data must be kept accurate, synchronized, and quickly accessible during validation. Even with fast computation, a slow or fragmented UTXO database can become the new bottleneck. Thus, future scaling work must not only embrace hardware acceleration but also rethink data access and memory hierarchy. Nevertheless, the computational foundation is secure: hardware validation is no longer an aspiration. It is, effectively, a solved problem.

6. Why Bitcoin Script Holds Us Back

After demonstrating the enormous gains available through hardware acceleration, culminating in the image of a global verifier system on a pinhead, Dr. Peter Rizun shifts his attention to a more difficult, less tangible challenge: Bitcoin’s execution model. While hashing and digital signatures lend themselves well to hardware implementation, Bitcoin Script does not. This is not merely a performance concern; it is an architectural barrier that threatens to undermine the full potential of scalable, decentralized finance.

6.1 Stack-Based Simplicity, Hardware-Hostile Execution

Bitcoin Script was originally designed with security and minimalism in mind. As a deliberately non-Turing-complete language, it avoids loops and recursion to prevent denial-of-service attacks and unbounded execution. It uses a stack-based execution model, a design that is familiar from Forth and other low-level languages. Scripts operate by pushing and popping data from the stack, using simple opcodes to compare hashes, verify signatures, and enforce spending conditions. From a security perspective, it’s elegant; from a hardware engineer’s perspective, however, it’s anything but.
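To show what "pushing and popping data from the stack" looks like in practice, here is a toy stack interpreter in Python. It is a deliberately tiny model in the spirit of Bitcoin Script, not an implementation of it: the opcode names are simplified stand-ins, only four operations exist, and the hash-lock script is an illustrative example.

```python
import hashlib

def run_script(script) -> bool:
    """Execute a list of items: bytes are pushed, strings are opcodes."""
    stack = []
    for item in script:
        if isinstance(item, bytes):
            stack.append(item)                      # data push
        elif item == "DUP":
            stack.append(stack[-1])
        elif item == "SHA256":
            stack.append(hashlib.sha256(stack.pop()).digest())
        elif item == "EQUAL":
            b, a = stack.pop(), stack.pop()
            stack.append(b"\x01" if a == b else b"")
        elif item == "VERIFY":
            if not stack.pop():
                return False                        # abort: condition failed
        else:
            raise ValueError(f"unknown opcode: {item}")
    # The spend is valid if a non-empty (true) value is left on top.
    return bool(stack) and bool(stack[-1])

# A hash lock: coins can be spent by whoever reveals the preimage of `digest`.
secret = b"open sesame"
digest = hashlib.sha256(secret).digest()
locking_script = ["SHA256", digest, "EQUAL"]

print(run_script([secret] + locking_script))        # True
print(run_script([b"wrong guess"] + locking_script))  # False
```

Every opcode here reads or rewrites the top of one shared stack, so each step depends on the result of the step before it. That serial dependency is the property the next list describes as hard to pipeline.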

Why? Because stack machines are inherently:

- Difficult to pipeline: Each operation depends on the stack’s current state, making it hard to schedule instructions efficiently in hardware.
- Challenging to analyze statically: Optimizing or translating scripts into circuits requires knowing their structure ahead of time, which is hard to do when control flow is based on stack dynamics.
- Non-modular: There’s no support for reusability, abstraction, or function calls. Every script is standalone and limited in what it can express.

In short, Bitcoin Script is not “compile-to-silicon” friendly. Unlike SHA-256 or ECDSA verification, which map naturally to logic gates, Bitcoin Script is hostile to hardware acceleration. Even high-performance CPUs struggle to optimize Script execution; building efficient ASICs for it is nearly impossible.

6.2 The Case for a RISC-V Transition

Recognizing this, Dr. Peter Rizun proposes a radical but well-reasoned alternative: replace Bitcoin Script with RISC-V, a modern, open instruction set architecture (ISA). Developed as an academic and industrial collaboration, it is:

- Formally specified, with a clean modular design
- Turing-complete, yet compact and minimal
- Friendly to both compilers and hardware synthesis tools
- Open-source and royalty-free, ideal for cryptographic ecosystems

Instead of executing Bitcoin Script opcodes on a virtual stack machine, transactions would include RISC-V “programs”: small snippets of compiled assembly code that define their own spending conditions. These programs would be run by a RISC-V core, either in software or in hardware, on each validating node. More crucially, these programs would operate on a well-defined memory model, using registers and linear memory instead of a stack. This makes it possible to:

- Compile higher-level languages down to verifiable, efficient bytecode
- Create reusable code segments, e.g. standard signature checks or quantum-safe scripts
- Offload transaction logic to hardware: just like we built hardware for SHA-256 and ECDSA, we could now build circuits that execute whole spending programs.

This model enables formal verification, reproducible builds, static analysis, and, eventually, hardware-native validation for arbitrary transaction conditions.

6.3 Challenges and Open Questions

Of course, such a shift raises substantial questions:

1. Consensus Compatibility

Bitcoin Script is part of Bitcoin’s consensus rules. Changing it would require a hard fork, a potentially contentious and politically risky move. Any transition would have to be opt-in, staged, and carefully engineered to avoid splitting the network.

2. Security and Sandboxing

RISC-V is powerful, but also dangerous. Turing-completeness reintroduces the halting problem: how do we prevent infinite loops or resource exhaustion? Dr. Peter Rizun suggests a mechanism akin to Ethereum’s “gas”, but in hardware: if a transaction’s program takes too long, it can be paused, serialized, and retried or discarded. The execution context, registers, and memory can be checkpointed into the transaction’s metadata.

3. Tooling and Ecosystem

A RISC-V-based scripting model would require new tools: compilers, interpreters, debuggers, static analyzers, and more. While the RISC-V ecosystem is growing rapidly, integrating it into Bitcoin’s security model would be a substantial engineering task.

4. Governance and Adoption

Even if technically sound, such a proposal faces political headwinds. Bitcoin’s development process is conservative by design; radical changes, especially ones that affect consensus-critical code, are scrutinized heavily and adopted slowly. Yet Dr. Peter Rizun’s core point stands: Bitcoin Script is the last major computational component not yet amenable to hardware acceleration. If we want to bring the entire transaction validation pipeline into silicon, Script must evolve, and RISC-V provides a credible, forward-compatible path.

7. Full Hardware Validation: Bringing Bitcoin Transactions into Silicon

As Dr. Peter Rizun transitions from individual cryptographic primitives to full transaction processing, he broadens the scope of hardware acceleration from isolated components (like hashing and signature checking) to the validation of entire Bitcoin transactions in hardware. This represents a paradigm shift: rather than treating validation as a set of loosely coupled software modules, the entire validation flow could be expressed in silicon, achieving dramatic gains in throughput and energy efficiency.

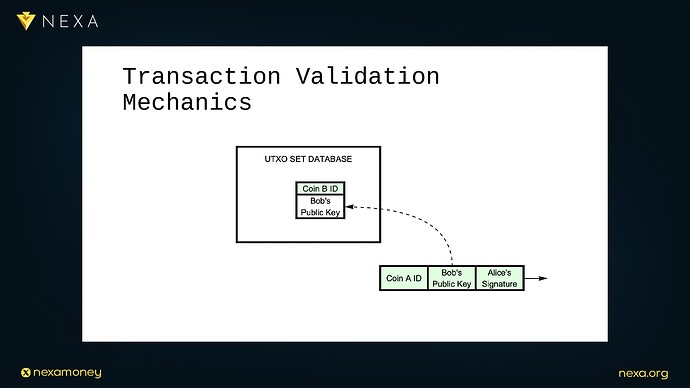

7.1 Transaction Mechanics: From Paper to Silicon

To motivate this vision, Dr. Peter Rizun revisits the basic mechanics of a Bitcoin transaction, as described in Satoshi Nakamoto’s whitepaper. When Alice wants to send a coin to Bob, she crafts a transaction that references the ID of the coin she owns, includes Bob’s public key, and provides her signature over the coin ID and destination key. The transaction does not explicitly include Alice’s public key or the preimage hash; these are reconstructed during the verification process by the recipient node.

In a conventional software node, this process entails:

- Checking the UTXO set to verify that the input coin exists and is unspent.
- Reconstructing the public key and hash that should have been signed.
- Verifying the signature using ECDSA or Schnorr algorithms.
- Appending the result to the mempool or rejecting it.

Dr. Peter Rizun’s argument is that every one of these steps can be expressed as a hardware circuit, composed of logic gates, multiplexers, flip-flops, memory buses, and instruction schedulers.

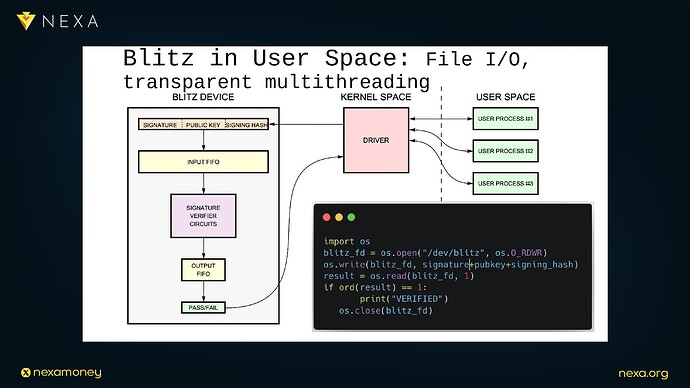

7.2 Hardware Transaction Validation Pipeline

He describes how this might work in practice:

- Upon receiving a transaction, the hardware validation engine first queries the UTXO set, either from local SRAM or a specialized coprocessor.
- It uses the returned public key to reconstruct the signing hash.
- It formats the signature check payload and streams it into a dedicated signature verification circuit (such as the one used in the Blitz prototype).
- The result of the signature check is routed back into a validation register bank.
- Finally, if the check passes, the transaction is accepted and its outputs are written back to the UTXO set.

This pipeline structure has several benefits:

- Low latency: Each stage can operate at nanosecond scales.
- Parallelism: Multiple validation pipelines can operate simultaneously, constrained only by chip area and power budget.
- Determinism: Hardware circuits behave in well-defined ways under clock control, eliminating the timing non-determinism that plagues general-purpose computing.
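The five pipeline stages described in this section can be mimicked in software to make the data flow explicit. The sketch below is only an illustration under stated assumptions: `Tx`, `signing_hash`, `toy_sign`, and `toy_verify` are hypothetical names, the serialization is invented, and the "signature" is a plain hash that anyone could compute, standing in for the ECDSA or Schnorr circuit.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Tx:
    coin_id: bytes                      # ID of the coin being spent
    signature: bytes                    # spender's signature
    outputs: List[Tuple[bytes, bytes]]  # (new coin ID, recipient public key)


def signing_hash(tx: Tx) -> bytes:
    """Hash over the coin ID and destination keys (hypothetical serialization)."""
    payload = tx.coin_id + b"".join(pubkey for _, pubkey in tx.outputs)
    return hashlib.sha256(payload).digest()


def toy_sign(pubkey: bytes, digest: bytes) -> bytes:
    """Stand-in for a real signature. NOT secure: anyone can compute it."""
    return hashlib.sha256(pubkey + digest).digest()


def toy_verify(pubkey: bytes, digest: bytes, sig: bytes) -> bool:
    """Stand-in for the dedicated signature verification circuit."""
    return sig == toy_sign(pubkey, digest)


def validate(tx: Tx, utxo: Dict[bytes, bytes]) -> bool:
    pubkey = utxo.get(tx.coin_id)                  # stage 1: UTXO lookup
    if pubkey is None:
        return False                               # unknown or already spent
    digest = signing_hash(tx)                      # stage 2: rebuild signing hash
    ok = toy_verify(pubkey, digest, tx.signature)  # stages 3-4: check and route result
    if not ok:
        return False
    del utxo[tx.coin_id]                           # stage 5: spend the input...
    for coin_id, recipient in tx.outputs:
        utxo[coin_id] = recipient                  # ...and write back the outputs
    return True


utxo = {b"coin-1": b"alice-pk"}
tx = Tx(b"coin-1", b"", [(b"coin-2", b"bob-pk")])
tx.signature = toy_sign(b"alice-pk", signing_hash(tx))
print(validate(tx, utxo), validate(tx, utxo))
```

The second call fails because the first one already removed `coin-1` from the UTXO set. In hardware, each commented stage would be its own circuit block, with many such pipelines running side by side.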

7.3 The Implication: Script Is the Barrier

Dr. Peter Rizun points out that, conceptually, this approach matches the intent of the original Bitcoin white paper more closely than the actual implementation does. Satoshi’s design was modular, relying on public key cryptography and hashing; the eventual inclusion of a programmable stack machine (Bitcoin Script) complicated the model significantly in ways that are not hardware-friendly. Hardware validation offers an opportunity to return to that original simplicity, with the added benefit that the system can now operate at global scale with commodity power and silicon costs.

However, there’s a catch. While basic transactions (as described in the white paper) are straightforward to implement in hardware, Bitcoin Script introduces substantial complexity that resists such clean mapping. This motivates the next section of Dr. Peter Rizun’s talk: why Bitcoin Script, though ingenious for its time, has become a technical liability in a hardware-accelerated world.

8. Script vs. Silicon: The Problem with Bitcoin’s Stack Machine

While the original Bitcoin protocol architecture aimed for simplicity, flexibility, and decentralization, its scripting language, Bitcoin Script, has become a major bottleneck for scaling via hardware acceleration. In this section, Dr. Peter Rizun highlights the weak points of the design of Bitcoin Script from the perspective of hardware efficiency, revealing how the very qualities that made it lightweight and flexible in 2009 now render it hostile to modern silicon optimization.

8.1 The Stack Machine Model: Elegant but Rigid

Bitcoin Script is a stack-based, interpreted scripting language. It was designed to be simple enough to prevent infinite loops or excessive computational cost (e.g., by excluding constructs like unbounded loops and recursion), while being powerful enough to express most conditions under which coins can be spent. It uses a postfix notation, where instructions operate on a Last-In-First-Out (LIFO) stack.

For example, to verify a digital signature, a typical scriptPubKey might push a public key and use the OP_CHECKSIG operation to verify the validity of a signature against the transaction data.
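To make the stack model concrete, here is a minimal sketch of that pay-to-public-key check. It is not Bitcoin’s actual interpreter: `run_script` and `toy_check_sig` are hypothetical names, only one opcode is handled, and the signature check is a stand-in (byte reversal) rather than real ECDSA.

```python
from typing import Callable, List, Union

Op = Union[bytes, str]  # bytes = data push, str = opcode name


def toy_check_sig(sig: bytes, pubkey: bytes) -> bool:
    """Stand-in for ECDSA verification (NOT real cryptography)."""
    return sig == pubkey[::-1]


def run_script(script: List[Op],
               check_sig: Callable[[bytes, bytes], bool] = toy_check_sig) -> bool:
    """Execute a tiny Script-like program on a LIFO stack."""
    stack: List[bytes] = []
    for op in script:
        if isinstance(op, bytes):
            stack.append(op)          # data push
        elif op == "OP_CHECKSIG":
            if len(stack) < 2:
                return False          # not enough operands
            pubkey = stack.pop()      # top of stack
            sig = stack.pop()         # next item down
            stack.append(b"\x01" if check_sig(sig, pubkey) else b"")
        else:
            return False              # unknown opcode
    # Success means a non-empty value is left on top of the stack.
    return bool(stack) and stack[-1] != b""


# The spender supplies the signature; the output script supplies the key.
script_sig = [b"yek-ecila"]
script_pubkey = [b"alice-key", "OP_CHECKSIG"]
print(run_script(script_sig + script_pubkey))
```

Note that every opcode reads whatever the previous one left on the stack. That implicit, step-by-step dependency is what the following sections identify as the obstacle to pipelining.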

This model is intentionally minimalistic and that minimalism has benefits:

- Deterministic execution paths.
- Simple opcode set.
- Easy manual verification.

But it also introduces serious limitations, particularly when one tries to express or optimize these operations in hardware.

8.2 Why Stack Machines Are Hardware-Hostile

Dr. Peter Rizun argues that stack machines are fundamentally misaligned with modern hardware design principles. Specifically:

- Lack of Parallelism: Stack execution is inherently sequential. Each opcode depends on the top elements of the stack, and one cannot easily predict or prefetch future operands. In contrast, hardware acceleration thrives on predictable dataflow and static scheduling.
- Dynamic Typing and Control Flow: Bitcoin Script is dynamically typed, and its control flow is only resolved at runtime. In hardware design, however, predictability and determinism are paramount. Without the ability to statically analyze control flow or allocate registers at compile time, hardware must either over-provision resources or resort to runtime microcontrollers, both of which negate the benefits of custom silicon.
- Limited Compiler Support: Hardware-friendly instruction sets (such as RISC architectures) benefit from mature compiler toolchains that can emit optimized logic-level code. Bitcoin Script has no formal compiler pipeline, no standard intermediate representations, and very limited tooling, making it difficult to target with HDL (hardware description language) compilers or synthesis tools.
- No Native Memory Model: Bitcoin Script’s memory model is extremely constrained. Aside from the stack and a small set of temporary variables, there’s no support for direct memory access or a linear address space. Hardware, on the other hand, expects a memory interface: buses, registers, address decoding, and more.

8.3 The Result: A Dead-End for Silicon Design

In practice, these limitations mean that Bitcoin Script resists pipelining, parallelization, and logic synthesis. Trying to physically implement the full scripting engine in hardware (as Dr. Peter Rizun attempted) becomes a combinatorially complex challenge, akin to embedding an interpreter inside a circuit. The result is neither efficient nor scalable. Even worse, any attempt to optimize or extend Script (e.g., to support introspection or new signature schemes) runs up against the rigid boundaries of its opcode set and virtual machine model. What was once a clever safeguard against DoS attacks has become a barrier to the very kind of performance gains the network now desperately needs.

8.4 The Implicit Thesis: Scripting Must Evolve

Dr. Peter Rizun’s analysis leads to a pointed conclusion: if Bitcoin is to scale with the help of hardware acceleration, it must abandon its stack machine in favor of something hardware-friendly, modular, and formally analyzable. That “something”, he argues, is RISC-V, an open, extensible instruction set designed from the ground up for physical implementation. This pivot sets the stage for a radical redesign of Bitcoin’s programmability model, one that aligns with the physics and economics of silicon rather than the limitations of interpreter logic.

9. RISC-V as a Hardware-Friendly Scripting Alternative

Having laid bare the limitations of Bitcoin’s current scripting model, Dr. Peter Rizun offers a compelling and concrete alternative: replacing Bitcoin Script with a subset of the RISC-V instruction set architecture (ISA). This is not a casual suggestion. It is a deep proposal to rethink how Bitcoin’s smart contract layer interacts with the underlying silicon, trading interpretive stacks for physical circuits and open, verifiable compute.

9.1 What Is RISC-V?

RISC-V (pronounced “risk five”) is an open-source, modular ISA based on reduced instruction set computing (RISC) principles. It was developed at UC Berkeley and has since gained broad industry adoption due to its flexibility, clean design, and royalty-free licensing. Unlike proprietary architectures such as x86 or ARM, RISC-V can be freely implemented in hardware, customized for specific use cases, and formally verified for correctness.

RISC-V has several properties that make it especially attractive in the context of Bitcoin scripting:

- Fixed-length instructions (32 bits in the base ISA), which simplifies decoding.
- Simple, orthogonal instruction set, making it easy to implement in hardware.
- Mature tooling, including GCC and LLVM compiler support, formal specifications, and test suites.
- Formal verifiability, which aligns with Bitcoin’s ethos of deterministic, trustless computation.

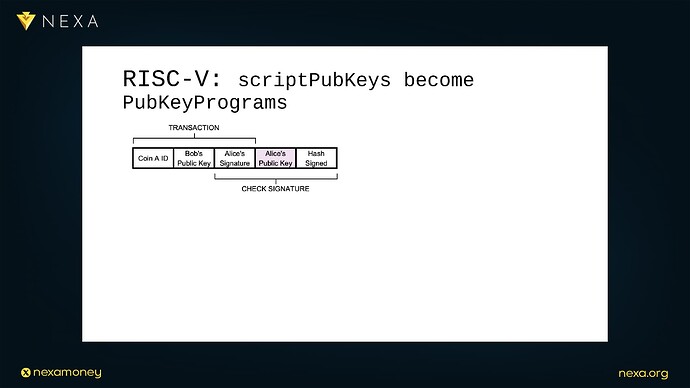

Dr. Peter Rizun’s idea is to replace Bitcoin Script’s interpreted execution model with native RISC-V programs embedded directly in the transaction data; these programs define spending conditions using real, low-level instructions rather than a constrained stack language.

9.2 Pubkey Scripts Become Pubkey Programs

In this reimagined system, instead of having a scriptPubKey that pushes a public key and invokes OP_CHECKSIG, a Bitcoin output would contain a RISC-V program, called a “pubkey program”. This program would be executed in a RISC-V virtual machine (or circuit) with access to the transaction data as its addressable memory space.

When a transaction input attempts to spend a coin, the validation engine does the following:

- Loads the pubkey program from the UTXO set.
- Maps the transaction and signature data into the RISC-V machine’s memory space.
- Begins executing the program with the program counter set to 0.
- Monitors for successful termination (valid signature) or failure (invalid logic, timeouts, etc.).

This structure brings significant clarity and modularity; instead of trying to cram more logic into the limited Bitcoin Script engine via hard forks or soft forks, developers would be writing real, well-defined programs in a standardized assembly language that can be verified, debugged, and optimized like any embedded firmware.

9.3 Why This Is So Powerful for Hardware

Where Bitcoin Script fights the grain of silicon, RISC-V flows with it:

- Hardware-native: Because RISC-V is designed to be implemented directly in hardware, the same circuits that execute transaction scripts could be physically laid out on-chip, turning logic into literal gates and wires.
- Static analysis and pipelining: RISC-V programs can be statically analyzed and compiled into pipelines that achieve nanosecond-scale timing.
- Formal verification: The entire ISA can be modeled in formal logic, allowing for mathematical proofs of correctness.
- Isolation and modularity: The instruction set is modular; only a small subset is needed for Bitcoin use cases, making the trusted computing base minimal.

These properties unlock a whole new era of programmable transaction validation, where developers, protocol designers, and chip architects can work together on a shared, open standard.

9.4 RISC-V Is Already Proven

This proposal isn’t happening in a vacuum. RISC-V is already used in real-world products ranging from microcontrollers to supercomputers. Blockchain projects like Ethereum 2.0, Nervos, and Mina have also explored or adopted RISC-style VMs for smart contract execution. In Dr. Peter Rizun’s vision, Bitcoin could do something even more radical: make RISC-V the native scripting language, not just in software, but all the way down to the transistor level.

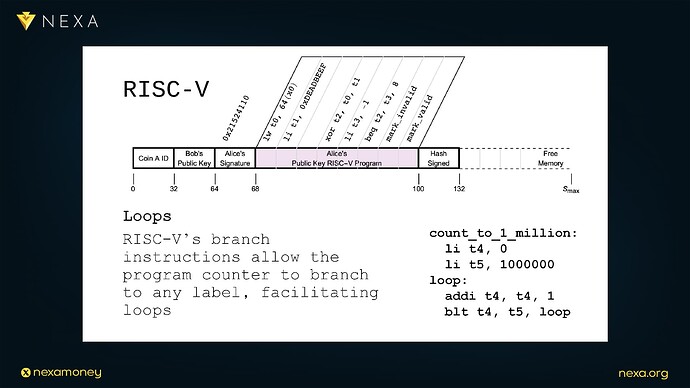

10. Execution Environment in Transactions: Memory Mapping and Stateful Programs

To realize the full potential of RISC-V as a Bitcoin scripting replacement, Dr. Peter Rizun introduces a radical idea: reimagining a Bitcoin transaction not just as a static data structure, but as a stateful execution environment. This section explores how transactions and validation can become part of a memory-mapped RISC-V program, moving from passive records to active computation substrates.

10.1 Memory-Mapping the Transaction

The key insight is that a RISC-V machine doesn’t need to operate in a vacuum. Instead, we can construct a virtual memory model where the entire transaction, including inputs, outputs, scripts, signatures, and auxiliary data, is treated as a contiguous block of memory addressable by a validating RISC-V processor.

In this design:

- The transaction begins at address 0x00000000.
- The signature to be verified may reside at offset 0x00000040.
- The public key might sit at 0x00000060.
- The pubkey program (RISC-V instructions) begins at a defined offset, say 0x00000100.

The transaction therefore serves as both data and code, a self-contained executable package.
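As a rough illustration of this layout, the sketch below packs a transaction into one flat byte image using the example offsets above. The helper names (`build_image`, `load_word`) and the header contents are hypothetical, and the slot sizes simply follow from the gaps between the example offsets rather than from any real transaction format.

```python
import struct

SIG_OFFSET = 0x40       # signature slot (example offset from the text)
PUBKEY_OFFSET = 0x60    # public key slot
PROGRAM_OFFSET = 0x100  # start of the pubkey program


def build_image(header: bytes, sig: bytes, pubkey: bytes, program: bytes) -> bytearray:
    """Lay the transaction out as one contiguous block starting at address 0."""
    if len(header) > SIG_OFFSET:
        raise ValueError("header overlaps the signature slot")
    if len(sig) > PUBKEY_OFFSET - SIG_OFFSET:
        raise ValueError("signature overlaps the public key slot")
    if len(pubkey) > PROGRAM_OFFSET - PUBKEY_OFFSET:
        raise ValueError("public key overlaps the program")
    image = bytearray(PROGRAM_OFFSET + len(program))
    image[0:len(header)] = header
    image[SIG_OFFSET:SIG_OFFSET + len(sig)] = sig
    image[PUBKEY_OFFSET:PUBKEY_OFFSET + len(pubkey)] = pubkey
    image[PROGRAM_OFFSET:] = program
    return image


def load_word(image: bytearray, addr: int) -> int:
    """Little-endian 32-bit load, the way a RISC-V LW instruction reads memory."""
    return struct.unpack_from("<I", image, addr)[0]


# One real RV32I instruction word: ADDI x0, x0, 0 (the canonical NOP).
nop = struct.pack("<I", 0x00000013)
image = build_image(b"tx-header", b"\xaa" * 32, b"\x02" * 33, nop)
print(hex(load_word(image, PROGRAM_OFFSET)))
```

The same `image` is what a validating core would see as its address space: data fields and executable code share a single linear range.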

10.2 Transactions as Executable Programs

When a Bitcoin node validates an input, it doesn’t merely interpret a script; it loads and runs a transaction-specific RISC-V program. This program:

- Reads from the memory-mapped transaction to extract its inputs.
- Performs arithmetic, logical operations, and cryptographic checks.
- Branches conditionally based on whether the spending conditions are met.
- Writes back to memory if needed (within sandboxed regions).
- Terminates with a pass/fail result indicating script success.

This model allows for complex computation, introspection, and logic to occur within the boundaries of a transaction, all executed deterministically and in isolation. It’s similar in spirit to how a Linux process operates on its own virtual address space, except that here it’s a trustless VM.

10.3 A Toy Example: SillySig

To make this more concrete, Rizun offers a “SillySig” example — a toy signature scheme where a signature is valid if it matches a hardcoded value, such as all zeros, or if the sum of its bytes equals a predetermined number. The goal isn’t security, but to demonstrate how a validation rule can be enforced entirely by a RISC-V program operating on memory-mapped transaction data.
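One way to picture the byte-sum variant is the toy interpreter below. It is a sketch under loud assumptions, not the program from the talk: instructions are Python tuples that borrow RISC-V mnemonics instead of real 32-bit encodings, branch targets are list indices rather than byte offsets, `halt` is an invented stop instruction, and `TARGET_SUM` is an arbitrary value. The signature location reuses the example offsets from Section 10.1.

```python
from typing import List, Tuple

SIG_START, SIG_END = 0x40, 0x60   # signature slot, per the layout in 10.1
TARGET_SUM = 100                  # hypothetical "valid" byte sum

# Each tuple mimics one RISC-V instruction; branch targets are list indices.
SILLYSIG: List[Tuple] = [
    ("addi", 5, 0, SIG_START),    # 0: x5 = pointer to first signature byte
    ("addi", 6, 0, SIG_END),      # 1: x6 = one past the last signature byte
    ("addi", 7, 0, 0),            # 2: x7 = running sum
    ("lbu", 28, 5, 0),            # 3: x28 = memory[x5 + 0]
    ("add", 7, 7, 28),            # 4: sum += byte
    ("addi", 5, 5, 1),            # 5: pointer += 1
    ("bne", 5, 6, 3),             # 6: loop until the pointer reaches the end
    ("addi", 28, 0, TARGET_SUM),  # 7: x28 = expected sum
    ("addi", 10, 0, 0),           # 8: a0 = 0 (fail) by default
    ("bne", 7, 28, 11),           # 9: skip the pass marker on mismatch
    ("addi", 10, 0, 1),           # 10: a0 = 1 (pass)
    ("halt",),                    # 11: stop; the result is in a0 (x10)
]


def run(program: List[Tuple], memory: bytearray, max_steps: int = 10_000) -> bool:
    """Execute the toy program; True means the spend is authorized."""
    x = [0] * 32                  # register file; x0 stays zero
    pc = 0                        # execution starts at instruction 0
    for _ in range(max_steps):
        op, *a = program[pc]
        pc += 1
        if op == "addi":
            x[a[0]] = (x[a[1]] + a[2]) & 0xFFFFFFFF
        elif op == "add":
            x[a[0]] = (x[a[1]] + x[a[2]]) & 0xFFFFFFFF
        elif op == "lbu":
            x[a[0]] = memory[x[a[1]] + a[2]]
        elif op == "bne":
            if x[a[0]] != x[a[1]]:
                pc = a[2]
        elif op == "halt":
            return x[10] == 1
        else:
            raise ValueError(f"unknown instruction {op!r}")
        x[0] = 0                  # writes to x0 are discarded
    return False                  # step budget exhausted: treat as failure


memory = bytearray(0x100)         # memory-mapped transaction, all zeros
memory[SIG_START] = TARGET_SUM    # a "signature" whose bytes sum to the target
print(run(SILLYSIG, memory))
```

The `run` function follows the validation steps from Section 9.2: the program is loaded, the transaction is its memory, execution starts at instruction 0, and the validator watches for a pass, a fail, or a timeout.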

11. RISC-V Capabilities: Introspection, Storage, Function Calls

Having established how transactions can become memory-mapped RISC-V execution environments, Dr. Peter Rizun now unpacks the advanced capabilities this model enables. What Bitcoin Script treats as exotic or outright impossible (introspection, dynamic storage, modular logic) becomes not only natural in RISC-V, but efficient and silicon-friendly.

11.1 Transaction Introspection: Native and Transparent

In Bitcoin Script, introspection is awkward: a script cannot easily inspect the full transaction that encloses it. As a result, script authors rely on hacky tricks, opcode proposals, or convoluted pre-image commitments. RISC-V, on the other hand, makes transaction introspection trivial.

Because the entire transaction is treated as addressable memory, a RISC-V program can inspect its own structure freely:

- Want to read the n-th input? Load from offset 0x00XYZ.
- Need to verify the size of the output array? Just dereference a known memory location.
- Interested in checking your own signature or pubkey? Load from where the validator expects them to be.

This provides a radically simple and general mechanism for building rules that depend on transaction metadata, like time locks, coin age, fee levels, or mempool-specific logic. No soft fork needed. No opcode additions. Just memory.
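A spending rule built on introspection then reduces to a few loads and comparisons. The sketch below assumes an invented layout (an output count at `0x08` followed by an array of 64-bit amounts); neither the addresses nor the rule itself come from the talk, they only show the shape of such a check.

```python
import struct

OUTPUT_COUNT_ADDR = 0x08   # hypothetical: u32 number of outputs
OUTPUT_VALUES_ADDR = 0x0C  # hypothetical: array of u64 output amounts


def output_count(mem: bytearray) -> int:
    """Dereference a known location to get the size of the output array."""
    return struct.unpack_from("<I", mem, OUTPUT_COUNT_ADDR)[0]


def output_value(mem: bytearray, n: int) -> int:
    """Read the n-th output amount straight out of transaction memory."""
    if n >= output_count(mem):
        raise IndexError("output index out of range")
    return struct.unpack_from("<Q", mem, OUTPUT_VALUES_ADDR + 8 * n)[0]


def spend_allowed(mem: bytearray, min_first_output: int) -> bool:
    """Example rule: one or two outputs, and the first pays at least a minimum."""
    count = output_count(mem)
    return 1 <= count <= 2 and output_value(mem, 0) >= min_first_output


# Build a small memory image with two outputs worth 5000 and 1200 units.
mem = bytearray(0x40)
struct.pack_into("<I", mem, OUTPUT_COUNT_ADDR, 2)
struct.pack_into("<QQ", mem, OUTPUT_VALUES_ADDR, 5000, 1200)
print(spend_allowed(mem, 4000), spend_allowed(mem, 6000))
```

In the proposed model the body of `spend_allowed` would be compiled to loads and branches inside the pubkey program, so a new rule of this kind is a new program rather than a new opcode.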

11.2 Linear Storage and Mutable State

Bitcoin Script is stateless: once a script runs, its memory is gone. There’s no way to maintain internal counters, flags, or history across executions without external constructs. By contrast, RISC-V allows transaction validators to read and write to pre-allocated memory regions within their transaction sandbox:

- Store intermediate hash results.
- Keep loop counters.
- Encode multi-stage logic (e.g., iterated hashing or signature aggregation).

For instance, if a signature verification requires computing multiple modular inverses, the results can be cached in memory rather than recomputed from scratch, boosting performance and opening new logic patterns. This doesn’t make RISC-V transactions globally stateful, but it provides rich local state to each script, fostering smart behavior while preserving determinism.

11.3 Function Calls and Modular Code Reuse

Another powerful feature: RISC-V supports function calls via the JAL (jump and link) instruction and the RET pseudo-instruction (an alias for a JALR jump through the return-address register). This unlocks modular program structure and, more importantly, code reuse across transactions. Imagine a quantum-resistant signature scheme with a 20 KB verification program. It would be prohibitive to include that much code in every transaction. But with RISC-V:

- The verification logic can be stored in a read-only UTXO.
- The pubkey program in a new transaction can JAL into that UTXO’s code.
- Execution jumps into the UTXO’s memory space, runs, then returns.

This offers a natural model for shared libraries:

- One-time write: heavy logic stored once on-chain.
- Many-time reference: individual transactions that jump into it.
- Guaranteed immutability: code can’t be tampered with once embedded in a UTXO.

Bitcoin Script simply can’t do this, but RISC-V enables a clean, elegant way to share, audit, and scale complex logic, from covenants and signature schemes to user-defined contract standards.
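How a validator might expose such a library to a running program is not spelled out in the talk. One plausible sketch, with every name and address below being an assumption, is an address space built from separately mapped regions: the spending transaction and the library UTXO are read-only, and only a scratch area accepts writes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    base: int          # first address covered by this region
    data: bytearray    # backing bytes
    writable: bool     # False for transaction data and shared library code


class AddressSpace:
    """Flat address space assembled from independently mapped regions."""

    def __init__(self) -> None:
        self.regions: List[Region] = []

    def map(self, base: int, data: bytes, writable: bool) -> None:
        self.regions.append(Region(base, bytearray(data), writable))

    def _find(self, addr: int) -> Region:
        for region in self.regions:
            if region.base <= addr < region.base + len(region.data):
                return region
        raise LookupError(f"unmapped address {addr:#x}")

    def load(self, addr: int) -> int:
        region = self._find(addr)
        return region.data[addr - region.base]

    def store(self, addr: int, value: int) -> None:
        region = self._find(addr)
        if not region.writable:
            raise PermissionError(f"write to read-only address {addr:#x}")
        region.data[addr - region.base] = value & 0xFF


LIB_BASE = 0x10000      # hypothetical address where the library UTXO is mapped
SCRATCH_BASE = 0x20000  # hypothetical writable scratch area

space = AddressSpace()
space.map(0x0, b"spending transaction bytes", writable=False)
space.map(LIB_BASE, b"\x13\x00\x00\x00", writable=False)  # shared code (one NOP)
space.map(SCRATCH_BASE, bytes(64), writable=True)

space.store(SCRATCH_BASE, 0x2A)      # allowed: sandboxed scratch memory
try:
    space.store(LIB_BASE, 0xFF)      # rejected: library code is immutable
except PermissionError as err:
    print(err)
```

A call into the library is then just a jump to an address inside the `LIB_BASE` region, and the read-only flag is what enforces the immutability property listed above.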

11.4 Turing Completeness, Loops, and the Halting Problem

With loop constructs (BEQ, BNE, JAL, etc.), RISC-V introduces full Turing completeness to transaction scripts. This means:

- Arbitrary logic can be expressed.
- Programs can count, iterate, and recurse.
- Transaction validation can simulate any computable process.

Of course, this raises the halting problem: what if a transaction runs forever? Dr. Peter Rizun’s earlier pause-and-snapshot solution ensures that validation remains safe:

- Nodes can cap CPU cycles and preempt slow scripts.
- Full machine state can be saved, allowing for retry or continuation.
- Transactions become not just code, but migratable processes in the network.

This makes the Bitcoin network more like an operating system, managing workloads, scheduling execution, and enforcing fairness, all while preserving full determinism.
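The pause-and-snapshot idea can be sketched with a toy machine that runs under an instruction budget and serializes its state when preempted. This is an illustration only: the three-instruction program, the `Machine` fields, and the JSON snapshot format are all assumptions, not the mechanism as specified in the talk.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class Machine:
    pc: int
    regs: List[int]
    halted: bool = False


# Toy program: count x1 down to zero, then stop.
PROGRAM = [
    ("addi", 1, 1, -1),  # 0: x1 -= 1
    ("bne", 1, 0, 0),    # 1: if x1 != x0, jump back to 0
    ("halt",),           # 2: done
]


def step(m: Machine) -> None:
    """Execute exactly one instruction."""
    op, *a = PROGRAM[m.pc]
    m.pc += 1
    if op == "addi":
        m.regs[a[0]] = m.regs[a[1]] + a[2]
    elif op == "bne":
        if m.regs[a[0]] != m.regs[a[1]]:
            m.pc = a[2]
    elif op == "halt":
        m.halted = True


def run_with_budget(m: Machine, budget: int) -> Optional[str]:
    """Run at most `budget` instructions; return a snapshot if preempted."""
    for _ in range(budget):
        if m.halted:
            return None
        step(m)
    return None if m.halted else json.dumps(asdict(m))


def resume(snapshot: str) -> Machine:
    """Rebuild a machine from a serialized snapshot, possibly on another node."""
    return Machine(**json.loads(snapshot))


m = Machine(pc=0, regs=[0, 1000] + [0] * 30)
snapshot = run_with_budget(m, 500)
slices = 1
while snapshot is not None:
    m = resume(snapshot)             # state survives the pause
    snapshot = run_with_budget(m, 500)
    slices += 1
print(slices, m.regs[1])
```

A node following this pattern could also give up after a fixed number of slices and discard the transaction, which is how an infinite loop would be bounded.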

12. Conclusion: From Interpreters to Circuits — Designing for the Next Billion Users

Dr. Peter Rizun’s talk charts a journey: from wires to logic gates, from SHA-256 to full transaction execution, and from Bitcoin Script to a RISC-V powered vision of smart, scalable validation. His thesis is bold but grounded in physics and engineering reality: if cryptocurrency is to scale to billions of users, it must move from interpreting scripts on general-purpose CPUs to executing logic directly in silicon.

12.1 Summary of the Hardware Advantage

Across every technical layer, Dr. Peter Rizun shows just how profound hardware acceleration can be:

- SHA-256 compression: Reduced from 54 cycles to 1 per round in hardware, yielding a 250,000× time-area product improvement over software.
- Signature verification: Down from 50 μs to 5 μs per check (a 10× reduction in latency), with ~200× overall gains cited for a 5M-transistor custom circuit.
- Validation at scale: 170 million transistors fit on a 1 mm² chip using 5 nm fabrication, enough for 34 verifier cores doing 200k signature checks/sec each.
- Result: 6.8 million verifications per second on the head of a pin.

Hardware acceleration is already a reality for Bitcoin mining (e.g., ASICs for SHA-256), and Dr. Peter Rizun’s Blitz project proves that the same transformation is possible for signature checking, and beyond.
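The headline figure follows directly from the numbers in the summary. The short check below reproduces that arithmetic and compares it with the demand scenario from the article’s opening (five billion people, two transactions a day each); the variable names are just labels for the quoted figures.

```python
TRANSISTORS_PER_MM2 = 170_000_000      # 5 nm density quoted above
TRANSISTORS_PER_VERIFIER = 5_000_000   # size of one signature verifier
CHECKS_PER_SEC_PER_CORE = 200_000      # throughput of one verifier core

cores = TRANSISTORS_PER_MM2 // TRANSISTORS_PER_VERIFIER
total_checks_per_sec = cores * CHECKS_PER_SEC_PER_CORE
micros_per_check = 1_000_000 / CHECKS_PER_SEC_PER_CORE

# Demand: 5 billion people x 2 transactions per day, spread over 86,400 seconds.
demand_per_sec = 5_000_000_000 * 2 / 86_400

print(cores)                  # verifier cores per square millimetre
print(total_checks_per_sec)   # signature checks per second per chip
print(micros_per_check)       # microseconds per check on one core
print(round(demand_per_sec))  # required checks per second worldwide
```

Under these figures one square millimetre of silicon delivers 6.8 million checks per second against a demand of roughly 116,000 per second, which is the headroom behind the “head of a pin” image.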

12.2 RISC-V: A Hardware-Friendly, Open-Source Future

Bitcoin Script was innovative in 2009, but it’s showing its age. Its stack-based design, lack of modularity, and hardware-hostile structure limit its future. RISC-V, by contrast, is:

- Open-source and standardized
- Silicon-native, with formal semantics
- Efficiently emulatable and synthesizable into hardware
- Memory-addressable, register-rich, and function-capable

By embedding RISC-V programs into transactions, we gain a path toward true programmable validation, where logic can be reused, reasoned about, and scaled, not only in software nodes, but also in hardware nodes and routers.

12.3 A Call to Builders

If there is one message Dr. Peter Rizun leaves us with, it’s this:

Silicon is inevitable.

Just as the printing press spread ideas by minimizing the cost of reproduction, specialized silicon will spread consensus by minimizing the cost of validation. To get there, we need:

- Hardware engineers designing crypto circuits
- Protocol designers envisioning modular, memory-safe architectures
- Core developers willing to rethink legacy assumptions about Script
- Toolmakers who bridge the gap between cryptographic intent and hardware description

Cryptocurrency will not scale through ever-larger data centers or megacores. It will scale when validation becomes ubiquitous, silent, cheap, and everywhere.

Closing Thought

“We can verify the world’s transactions on the head of a pin… but only if we rethink how we compute.”

Dr. Peter Rizun is offering a new philosophy of design, one where code is not interpreted, but synthesized, where validators are not daemons, but circuits, and where money scales not by centralization, but by efficiency encoded in silicon itself.